If you find Complexity Thoughts interesting, follow us! Click on the Like button, leave a comment, repost on Substack or share this post. It is the only feedback I get for this free service. The frequency and quality of this newsletter rely on social interactions. Thank you!

DALL·E 3 representation of this issue’s content

Foundations of network science and complex systems

Order parameter dynamics in complex systems: From models to data

A well-organized review.

Collective ordering behaviors are typical macroscopic manifestations embedded in complex systems and can be observed ubiquitously across various physical backgrounds. Elements in complex systems may self-organize via mutual or external couplings to achieve diverse spatiotemporal coordinations. The order parameter, a powerful quantity for describing the transition to collective states, may emerge spontaneously from large numbers of degrees of freedom through competition. In this minireview, we extensively discuss the collective dynamics of complex systems from the viewpoint of order-parameter dynamics. A synergetic theory is adopted as the foundation of order-parameter dynamics; it focuses on the self-organization and collective behaviors of complex systems. At the onset of macroscopic transitions, slow modes are distinguished from fast modes and act as order parameters, whose evolution can be established in terms of the slaving principle. We explore order-parameter dynamics in both model-based and data-based scenarios. For situations where microscopic dynamical models are available, synchronization of coupled phase oscillators, chimera states, and neuron network dynamics are studied analytically as prototype examples, and the order-parameter dynamics is constructed through reduction procedures such as the Ott–Antonsen ansatz, the Lorentz ansatz, and so on. For complicated systems that are highly challenging to model well, we propose the eigen-microstate approach (EMP) to reconstruct the macroscopic order-parameter dynamics: the spatiotemporal evolution captured by big data is decomposed into eigenmodes, and the macroscopic collective behavior can be traced through Bose–Einstein condensation-like transitions and the emergence of dominant eigenmodes.
The EMP has been successfully applied to typical examples such as phase transitions in the Ising model, climate dynamics in earth systems, fluctuation patterns in stock markets, and collective motion in living systems.
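To make the idea of an order parameter concrete, here is a minimal sketch (not code from the review) of the Kuramoto model, the textbook case of synchronization in coupled phase oscillators. It measures r = |⟨e^{iθ}⟩| and compares it with the classic large-N result for Lorentzian-distributed natural frequencies of half-width γ: r = sqrt(1 - 2γ/K) above the critical coupling K_c = 2γ, which the Ott-Antonsen ansatz reproduces. The population size, `gamma`, time step and the deterministic sampling of frequencies are illustrative assumptions.

```python
import numpy as np

def order_parameter(theta):
    """Kuramoto order parameter r = |mean(exp(i*theta))|: 0 = incoherent, 1 = full synchrony."""
    return float(np.abs(np.mean(np.exp(1j * theta))))

def simulate_kuramoto(K, N=500, gamma=0.5, dt=0.01, steps=5000, seed=0):
    """Euler-integrate the mean-field Kuramoto model; return r averaged over the second half of the run."""
    rng = np.random.default_rng(seed)
    # Assumption: natural frequencies are evenly spaced quantiles of a Lorentzian with half-width gamma
    q = np.arange(1, N + 1) / (N + 1)
    omega = gamma * np.tan(np.pi * (q - 0.5))
    theta = rng.uniform(0.0, 2.0 * np.pi, N)
    r_trace = []
    for _ in range(steps):
        z = np.mean(np.exp(1j * theta))  # complex order parameter r * exp(i * psi)
        r, psi = np.abs(z), np.angle(z)
        # Each oscillator is pulled toward the mean phase psi with strength K * r
        theta = theta + dt * (omega + K * r * np.sin(psi - theta))
        r_trace.append(r)
    return float(np.mean(r_trace[steps // 2:]))

if __name__ == "__main__":
    gamma = 0.5
    for K in (0.5, 4.0):
        # Large-N stationary value for Lorentzian frequencies (zero below K_c = 2 * gamma)
        predicted = np.sqrt(1.0 - 2.0 * gamma / K) if K > 2.0 * gamma else 0.0
        print(f"K={K}: simulated r={simulate_kuramoto(K, gamma=gamma):.3f}, predicted r={predicted:.3f}")
```

Below K_c the simulated r stays at the small finite-size level; above it, r settles close to the predicted value. The reduction in the review replaces the N phase equations with a low-dimensional equation for r itself.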

In discrete-state Markovian systems, many important properties of correlation functions and relaxation dynamics depend on the spectrum of the rate matrix. Here we demonstrate the existence of a universal trade-off between thermodynamic and spectral properties. We show that the entropy production rate, the fundamental thermodynamic cost of a nonequilibrium steady state, bounds the difference between the eigenvalues of a nonequilibrium rate matrix and a reference equilibrium rate matrix. Using this result, we derive thermodynamic bounds on the spectral gap, which governs autocorrelation times and the speed of relaxation to a steady state. We also derive thermodynamic bounds on the imaginary parts of the eigenvalues, which govern the speed of oscillations. We illustrate our approach using a simple model of biomolecular sensing.
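The quantities this abstract relates can be computed directly for a toy system. The sketch below is an illustration, not the authors' bound: it builds the rate matrix of a hypothetical 3-state ring with clockwise rate `k_plus` and counter-clockwise rate `k_minus`, then reports the spectral gap (slowest relaxation rate), the largest imaginary part of the eigenvalues (oscillation frequency), and the steady-state entropy production rate. With `k_plus == k_minus` detailed balance holds, and entropy production and imaginary parts vanish together.

```python
import numpy as np

def ring_rate_matrix(n, k_plus, k_minus):
    """Rate matrix of a uniform n-state ring (assumes n >= 3): W[i, j] is the rate j -> i."""
    W = np.zeros((n, n))
    for j in range(n):
        W[(j + 1) % n, j] += k_plus   # clockwise jump
        W[(j - 1) % n, j] += k_minus  # counter-clockwise jump
        W[j, j] -= k_plus + k_minus   # total escape rate, so each column sums to 0
    return W

def steady_state(W):
    """Stationary distribution: the null vector of W, normalized to sum to 1."""
    _, _, Vt = np.linalg.svd(W)
    p = Vt[-1]
    return p / p.sum()

def entropy_production_rate(W, p):
    """Sum over edges of (net flux) * log(forward flux / backward flux)."""
    sigma = 0.0
    for i in range(len(p)):
        for j in range(i + 1, len(p)):
            if W[i, j] > 0 and W[j, i] > 0:
                fwd, bwd = W[i, j] * p[j], W[j, i] * p[i]
                sigma += (fwd - bwd) * np.log(fwd / bwd)
    return sigma

def spectral_summary(W, tol=1e-10):
    """Spectral gap and largest |imaginary part| among the nonzero eigenvalues of W."""
    vals = np.linalg.eigvals(W)
    nonzero = vals[np.abs(vals) > tol]
    return -np.max(nonzero.real), np.max(np.abs(nonzero.imag))

if __name__ == "__main__":
    for k_plus, k_minus in [(1.0, 1.0), (2.0, 1.0), (5.0, 1.0)]:
        W = ring_rate_matrix(3, k_plus, k_minus)
        p = steady_state(W)
        gap, omega = spectral_summary(W)
        print(f"k+={k_plus}, k-={k_minus}: sigma={entropy_production_rate(W, p):.3f}, "
              f"gap={gap:.3f}, max|Im|={omega:.3f}")
```

For this symmetric ring the results have closed forms: the gap is 1.5(k₊ + k₋), the largest imaginary part is (√3/2)|k₊ - k₋|, and the entropy production rate is (k₊ - k₋) ln(k₊/k₋). Oscillations therefore appear only when the system is driven out of equilibrium.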

Spontaneous emergence of groups and signaling diversity in dynamic networks

We study the coevolution of network structure and signaling behavior. We model agents who can preferentially associate with others in a dynamic network while they also learn to play a simple sender-receiver game. We have four major findings. First, signaling interactions in dynamic networks are sufficient to cause the endogenous formation of distinct signaling groups, even in an initially homogeneous population. Second, dynamic networks allow the emergence of novel hybrid signaling groups that do not converge on a single common signaling system but are instead composed of different yet complementary signaling strategies. We show that the presence of these hybrid groups promotes stable diversity in signaling among other groups in the population. Third, we find important distinctions in information processing capacity of different groups: hybrid groups diffuse information more quickly initially but at the cost of taking longer to reach all group members. Fourth, our findings pertain to all common interest signaling games, are robust across many parameters, and mitigate known problems of inefficient communication.
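For readers new to signaling games, the basic ingredients fit in a few lines. This is a hypothetical toy, not the authors' model: agents play a Lewis sender-receiver game with equiprobable states and learn with Roth-Erev urn reinforcement. After each successful round, the sender also reinforces its link to that receiver, so the interaction network co-evolves with the signaling strategies. Population size, number of states, unit payoff and the learning rule are all assumptions.

```python
import numpy as np

def run_signaling_network(n_agents=6, n_states=2, rounds=10000, seed=0):
    """Hypothetical toy: Lewis signaling with Roth-Erev urn learning and partner reinforcement."""
    rng = np.random.default_rng(seed)
    n_signals = n_acts = n_states                        # assumption: as many signals and acts as states
    sender = np.ones((n_agents, n_states, n_signals))    # urn weights: state -> signal
    receiver = np.ones((n_agents, n_signals, n_acts))    # urn weights: signal -> act
    links = np.ones((n_agents, n_agents))                # partner-choice weights (the dynamic network)
    np.fill_diagonal(links, 0.0)                         # agents never play with themselves
    for _ in range(rounds):
        i = rng.integers(n_agents)                                   # sender
        j = rng.choice(n_agents, p=links[i] / links[i].sum())        # receiver, chosen by link weight
        state = rng.integers(n_states)                               # states are equiprobable
        signal = rng.choice(n_signals, p=sender[i, state] / sender[i, state].sum())
        act = rng.choice(n_acts, p=receiver[j, signal] / receiver[j, signal].sum())
        if act == state:                                             # common-interest payoff of 1
            sender[i, state, signal] += 1.0
            receiver[j, signal, act] += 1.0
            links[i, j] += 1.0                                       # sender more likely to pick j again
    return links, sender, receiver

def pair_success(sender, receiver, i, j):
    """Expected success probability when agent i sends to agent j (equiprobable states)."""
    S = sender[i] / sender[i].sum(axis=1, keepdims=True)      # P(signal | state)
    R = receiver[j] / receiver[j].sum(axis=1, keepdims=True)  # P(act | signal)
    return float(np.trace(S @ R) / S.shape[0])

if __name__ == "__main__":
    links, sender, receiver = run_signaling_network()
    n = links.shape[0]
    for i in range(n):
        w = links[i] / links[i].sum()
        score = sum(w[j] * pair_success(sender, receiver, i, j) for j in range(n) if j != i)
        print(f"agent {i}: partner-weighted success {score:.2f}, favorite partner {int(np.argmax(links[i]))}")
```

Comparing `pair_success` within and across clusters of strongly linked agents is one simple way to look for the distinct signaling groups described in the abstract.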

Neuroscience

Studies of mechanisms of adaptive behavior generally focus on neurons and circuits. But adaptive behavior also depends on interactions among the nervous system, body and environment: sensory preprocessing and motor post-processing filter inputs to and outputs from the nervous system; co-evolution and co-development of the nervous system and periphery create matching and complementarity between them; body structure creates constraints and opportunities for neural control; and continuous feedback among the nervous system, body and environment is essential for normal behavior. This broader view of adaptive behavior has been a major underpinning of ecological psychology and has influenced behavior-based robotics. Computational neuroethology, which jointly models the neural control and periphery of animals, is a promising methodology for understanding adaptive behavior.

Neurophysiological signatures of cortical micro-architecture

Systematic spatial variation in micro-architecture is observed across the cortex. These micro-architectural gradients are reflected in neural activity, which can be captured by neurophysiological time-series. How spontaneous neurophysiological dynamics are organized across the cortex and how they arise from heterogeneous cortical micro-architecture remains unknown. Here we extensively profile regional neurophysiological dynamics across the human brain by estimating over 6800 time-series features from the resting state magnetoencephalography (MEG) signal. We then map regional time-series profiles to a comprehensive multi-modal, multi-scale atlas of cortical micro-architecture, including microstructure, metabolism, neurotransmitter receptors, cell types and laminar differentiation. We find that the dominant axis of neurophysiological dynamics reflects characteristics of power spectrum density and linear correlation structure of the signal, emphasizing the importance of conventional features of electromagnetic dynamics while identifying additional informative features that have traditionally received less attention. Moreover, spatial variation in neurophysiological dynamics is co-localized with multiple micro-architectural features, including gene expression gradients, intracortical myelin, neurotransmitter receptors and transporters, and oxygen and glucose metabolism. Collectively, this work opens new avenues for studying the anatomical basis of neural activity.
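A "dominant axis" in studies like this one is typically the first principal component of a regions-by-features matrix. Here is a minimal sketch, assuming z-scored feature columns and PCA via SVD. `simple_features` computes only three illustrative features (variance, lag-1 autocorrelation, share of low-frequency power), whereas the paper estimates over 6800, and the placeholder signals are synthetic.

```python
import numpy as np

def simple_features(ts):
    """Three illustrative features of one regional time series (not the paper's feature set)."""
    x = (ts - ts.mean()) / ts.std()
    lag1 = np.corrcoef(x[:-1], x[1:])[0, 1]                  # linear autocorrelation at lag 1
    power = np.abs(np.fft.rfft(x)) ** 2
    low = power[1:len(power) // 4].sum() / power[1:].sum()   # share of power in lowest quarter of frequencies
    return np.array([ts.std(), lag1, low])

def dominant_axis(F):
    """First principal component of a regions x features matrix with z-scored columns."""
    Z = (F - F.mean(axis=0)) / F.std(axis=0)
    U, s, _ = np.linalg.svd(Z, full_matrices=False)
    scores = U[:, 0] * s[0]                  # one PC1 score per region
    explained = s[0] ** 2 / np.sum(s ** 2)   # fraction of variance captured by PC1
    return scores, explained

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Placeholder data: 68 "regions" of white noise smoothed by region-specific moving averages
    signals = [np.convolve(rng.normal(size=2000), np.ones(k) / k, mode="valid") for k in range(1, 69)]
    F = np.vstack([simple_features(ts) for ts in signals])
    scores, explained = dominant_axis(F)
    print(f"PC1 explains {explained:.0%} of feature variance")
```

To relate PC1 scores to a cortical map (for example a hypothetical `myelin_map` with one value per region), `np.corrcoef(scores, myelin_map)` gives the raw correlation. Because brain maps are spatially autocorrelated, published analyses typically assess significance with spatial null models such as spin tests rather than naive p-values.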

How critical is brain criticality?

we review these emerging trends in criticality neuroscience, highlighting new data pertaining to the edge of chaos and near-criticality, and making a case for the distance to criticality as a useful metric for probing cognitive states and mental illness

Empirical and theoretical work suggests that the brain operates at the edge of a critical phase transition between order and disorder. The wider adoption and investigation of criticality theory as a unifying framework in neuroscience has been hindered in part by the potentially daunting complexity of its analytical and theoretical foundation.

Among critical phase transitions, avalanche and edge of chaos criticality are particularly relevant to studying brain function and dysfunction. The computational features of criticality provide a conceptual link between neuronal dynamics and cognition. Mounting evidence suggests that near-criticality, rather than strict criticality, may be the more plausible mode of operation for the brain.

The distance to criticality presents a promising and underexploited biological parameter for characterizing cognitive differences and mental illness.
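The distance to criticality is often operationalized through the branching ratio m of neural activity: m = 1 is the critical point of a branching process, and 1 - m measures how far a subcritical system sits from it. The review discusses several markers, so treat this as one illustrative proxy rather than the authors' definition. A minimal sketch, assuming activity follows a driven branching process with Poisson offspring and constant external drive `h`: the naive estimator regresses activity at t+1 on activity at t. That works here because every unit is observed; with subsampled recordings it is biased, and multistep-regression estimators have been proposed for that case.

```python
import numpy as np

def simulate_driven_branching(m, h, steps=20000, seed=0):
    """Assumed model: A_{t+1} ~ Poisson(m * A_t + h), with branching ratio m and external drive h."""
    if not 0 <= m < 1:
        raise ValueError("this sketch assumes a subcritical branching ratio 0 <= m < 1")
    rng = np.random.default_rng(seed)
    A = np.empty(steps, dtype=np.int64)
    A[0] = round(h / (1 - m))  # start near the stationary mean h / (1 - m)
    for t in range(steps - 1):
        A[t + 1] = rng.poisson(m * A[t] + h)
    return A

def estimate_branching_ratio(A):
    """Naive estimator: least-squares slope of A_{t+1} against A_t (biased under subsampling)."""
    slope, _ = np.polyfit(A[:-1], A[1:], 1)
    return float(slope)

if __name__ == "__main__":
    for m in (0.5, 0.9, 0.98):
        m_hat = estimate_branching_ratio(simulate_driven_branching(m, h=5.0))
        print(f"true m={m}: estimated m={m_hat:.3f}, distance to criticality={1 - m_hat:.3f}")
```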

Ecology and Evolution

Complex adaptations and the evolution of evolvability

I discovered this paper thanks to a discussion with Ricard Solé (thanks, Ricard). It is extremely fascinating, and it makes an important point: evolution in computer science is not exactly a digital counterpart of evolution in biology.

The paper raises many great questions, and almost 30 years later I could not find definitive answers. If you can point to papers that resolve any of the questions in the abstract, please share them with us.

However, even if the fact and the importance of modularity seems to be widely appreciated, there is little understanding of what selective forces have generated genetic and developmental modularity

The problem of complex adaptations is studied in two largely disconnected research traditions: evolutionary biology and evolutionary computer science. This paper summarizes the results from both areas and compares their implications. In evolutionary computer science it was found that the Darwinian process of mutation, recombination and selection is not universally effective in improving complex systems like computer programs or chip designs. For adaptation to occur, these systems must possess “evolvability,” i.e., the ability of random variations to sometimes produce improvement. It was found that evolvability critically depends on the way genetic variation maps onto phenotypic variation, an issue known as the representation problem. The genotype‐phenotype map determines the variability of characters, which is the propensity to vary. Variability needs to be distinguished from variations, which are the actually realized differences between individuals. The genotype‐phenotype map is the common theme underlying such varied biological phenomena as genetic canalization, developmental constraints, biological versatility, developmental dissociability, and morphological integration. For evolutionary biology the representation problem has important implications: how is it that extant species acquired a genotype‐phenotype map which allows improvement by mutation and selection? Is the genotype‐phenotype map able to change in evolution? What are the selective forces, if any, that shape the genotype‐phenotype map? We propose that the genotype‐phenotype map can evolve by two main routes: epistatic mutations, or the creation of new genes. A common result for organismic design is modularity. By modularity we mean a genotype‐phenotype map in which there are few pleiotropic effects among characters serving different functions, with pleiotropic effects falling mainly among characters that are part of a single functional complex. 
Such a design is expected to improve evolvability by limiting the interference between the adaptation of different functions. Several population genetic models are reviewed that are intended to explain the evolutionary origin of a modular design. While our current knowledge is insufficient to assess the plausibility of these models, they form the beginning of a framework for understanding the evolution of the genotype‐phenotype map.
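Fisher's geometric model offers a quick way to see why limiting pleiotropy can help. This is an illustrative toy, not one of the population genetic models the paper reviews: the phenotype is a point in `n_traits` dimensions, fitness decreases with distance from an optimum at the origin, and a mutation perturbs either every trait (full pleiotropy) or only the traits of one randomly chosen module. The per-trait effect size `sigma`, the module size and the starting phenotype are arbitrary assumptions.

```python
import numpy as np

def fraction_beneficial(n_traits=10, module_size=2, sigma=0.3, modular=True, trials=20000, seed=0):
    """Monte Carlo fraction of random mutations that move the phenotype closer to the optimum (origin)."""
    rng = np.random.default_rng(seed)
    p0 = np.ones(n_traits) / np.sqrt(n_traits)   # assumption: start at unit distance from the optimum
    delta = rng.normal(0.0, sigma, size=(trials, n_traits))
    if modular:
        # Assumption: each mutation affects only the traits of one randomly chosen module
        module = rng.integers(n_traits // module_size, size=trials)
        trait_module = np.arange(n_traits) // module_size   # module label of each trait
        delta = delta * (trait_module[None, :] == module[:, None])
    new_dist = np.linalg.norm(p0 + delta, axis=1)
    return float(np.mean(new_dist < np.linalg.norm(p0)))

if __name__ == "__main__":
    print(f"modular map:       {fraction_beneficial(modular=True):.3f} of mutations beneficial")
    print(f"full pleiotropy:   {fraction_beneficial(modular=False):.3f} of mutations beneficial")
```

With these settings the modular map yields a clearly larger fraction of beneficial mutations, because each mutation disturbs fewer traits that are already near their optimum. The gap shrinks as `sigma` becomes small, consistent with Fisher's observation that very small mutations are beneficial about half the time regardless of dimension.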

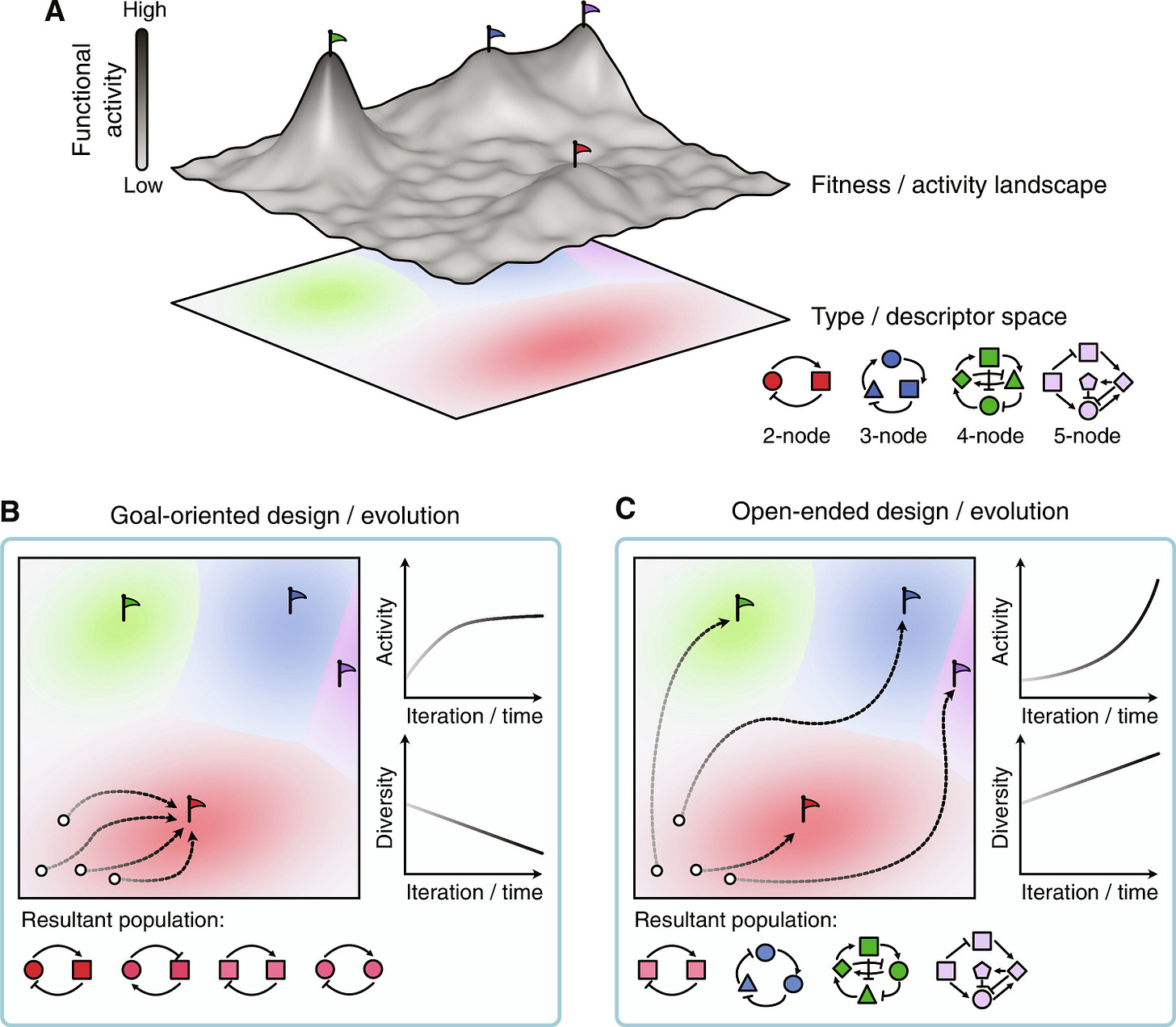

Open-endedness in synthetic biology: A route to continual innovation for biological design

Before reading this one, it might be useful to check the paper by Banzhaf et al. (2016), which explains the three levels at which entities are organized:

Implementing evolution as a biodesign principle, such as enhancing an entity’s evolvability, also raises substantial concerns regarding biosecurity and unintended consequences

Design in synthetic biology is typically goal oriented, aiming to repurpose or optimize existing biological functions, augmenting biology with new-to-nature capabilities, or creating life-like systems from scratch. While the field has seen many advances, bottlenecks in the complexity of the systems built are emerging and designs that function in the lab often fail when used in real-world contexts. Here, we propose an open-ended approach to biological design, with the novelty of designed biology being at least as important as how well it fulfils its goal. Rather than solely focusing on optimization toward a single best design, designing with novelty in mind may allow us to move beyond the diminishing returns we see in performance for most engineered biology. Research from the artificial life community has demonstrated that embracing novelty can automatically generate innovative and unexpected solutions to challenging problems beyond local optima. Synthetic biology offers the ideal playground to explore more creative approaches to biological design.
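In the artificial life literature the abstract refers to, a common way to reward novelty is novelty search (Lehman and Stanley), which scores a candidate by how far its behavior lies from behaviors seen before, usually as the mean distance to its k nearest neighbors in an archive. A minimal sketch, assuming behaviors are numeric vectors (for a biological design these might be hypothetical measured phenotypes such as expression levels), Euclidean distance, and an arbitrary archive-admission `threshold`:

```python
import numpy as np

def novelty(behavior, archive, k=3):
    """Mean Euclidean distance from `behavior` to its k nearest neighbors in a non-empty archive."""
    dists = np.linalg.norm(np.asarray(archive) - np.asarray(behavior), axis=1)
    k = min(k, len(dists))
    return float(np.mean(np.sort(dists)[:k]))

def novelty_search_step(population, archive, k=3, threshold=1.0):
    """Rank candidates by novelty (most novel first) and archive those above the threshold."""
    scores = [novelty(b, archive, k) for b in population]
    for b, s in zip(population, scores):
        if s > threshold:
            archive.append(np.asarray(b))
    order = np.argsort(scores)[::-1]
    return [population[i] for i in order], archive

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    archive = [rng.normal(size=2) for _ in range(5)]
    candidates = [rng.normal(scale=3.0, size=2) for _ in range(8)]
    ranked, archive = novelty_search_step(candidates, archive)
    print("most novel candidate:", ranked[0], "| archive size:", len(archive))
```

In a full loop, the most novel candidates would be varied to form the next batch, and the growing archive keeps the search from revisiting old behaviors. Note that nothing here measures how well a design fulfils its goal, which is exactly the shift in emphasis the authors propose.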

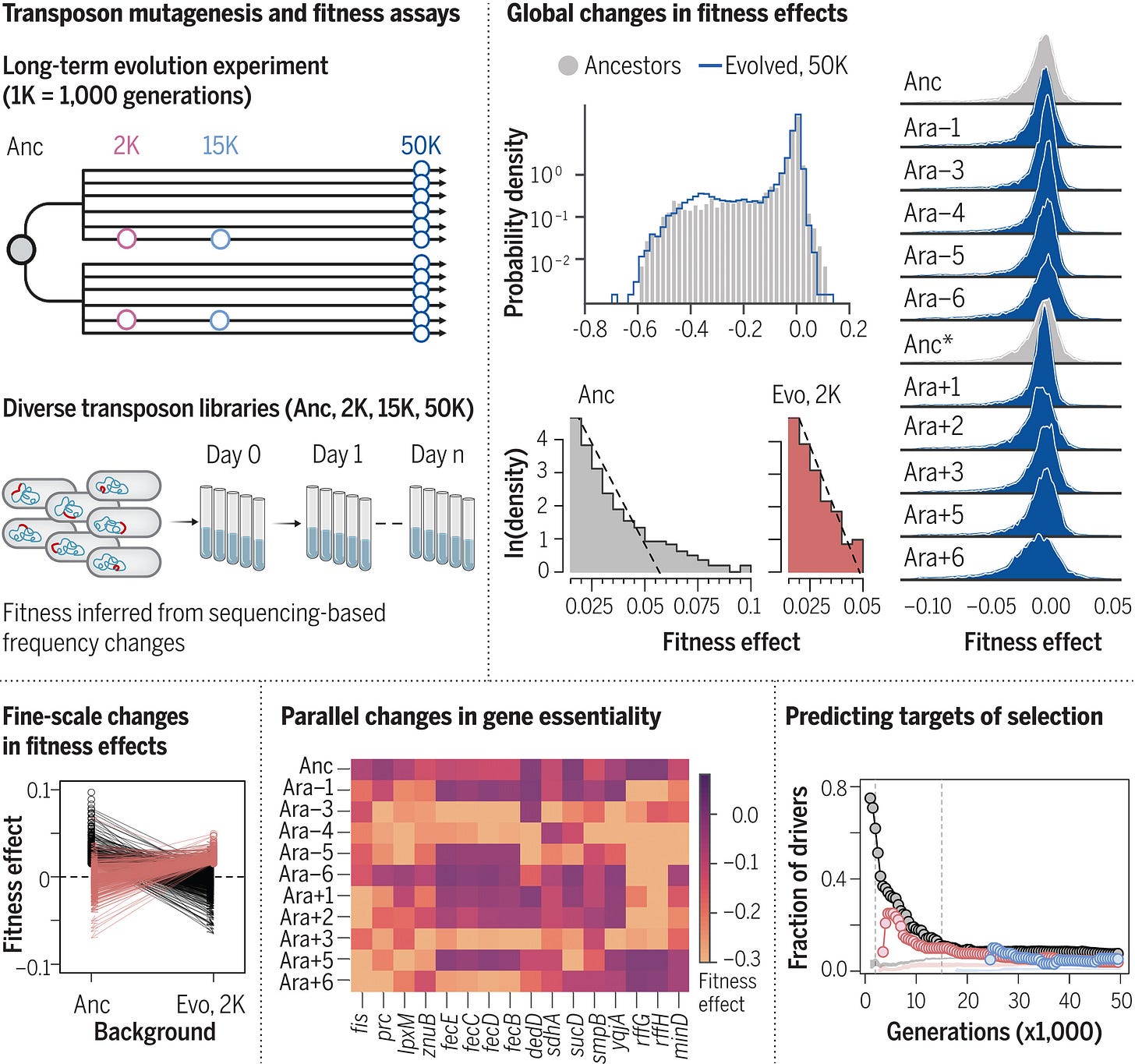

Changing fitness effects of mutations through long-term bacterial evolution

Consequently, some evolutionary paths that were inaccessible to the ancestor became accessible to the evolving populations, while others were closed off

The distribution of fitness effects of new mutations shapes evolution, but it is challenging to observe how it changes as organisms adapt. Using Escherichia coli lineages spanning 50,000 generations of evolution, we quantify the fitness effects of insertion mutations in every gene. Macroscopically, the fraction of deleterious mutations changed little over time whereas the beneficial tail declined sharply, approaching an exponential distribution. Microscopically, changes in individual gene essentiality and deleterious effects often occurred in parallel; altered essentiality is only partly explained by structural variation. The identity and effect sizes of beneficial mutations changed rapidly over time, but many targets of selection remained predictable because of the importance of loss-of-function mutations. Taken together, these results reveal the dynamic—but statistically predictable—nature of mutational fitness effects.
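Two of the summaries in this abstract, the fraction of deleterious mutations and the shape of the beneficial tail, are easy to compute from a table of per-mutation fitness effects. A minimal sketch, assuming effects are selection coefficients s (negative means deleterious), a hypothetical noise `threshold` below which effects count as neutral, and an exponential fit to the beneficial tail by maximum likelihood (its scale is simply the mean excess over the threshold). The coefficient of variation serves as a quick shape check, since it equals 1 for an exponential.

```python
import numpy as np

def summarize_dfe(s, threshold=0.01):
    """Summaries of a distribution of fitness effects given selection coefficients s."""
    s = np.asarray(s, dtype=float)
    excess = s[s > threshold] - threshold        # beneficial tail above the noise threshold
    return {
        "frac_deleterious": float(np.mean(s < -threshold)),
        "frac_beneficial": float(np.mean(s > threshold)),
        "tail_scale": float(excess.mean()),                  # exponential MLE of the tail's scale
        "tail_cv": float(excess.std() / excess.mean()),      # equals 1 for an exact exponential
    }

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic placeholder effects, not data from the paper
    s = np.concatenate([-rng.exponential(0.05, 6000),
                        rng.uniform(-0.005, 0.005, 3000),
                        0.01 + rng.exponential(0.02, 1000)])
    print(summarize_dfe(s))
```

Applying the same summary to lineages sampled at different generations is one way to track how the deleterious fraction and the beneficial tail change over time.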

Phenotypic Plasticity in the Interactions and Evolution of Species

the (co)evolution of species interactions has certainly resulted in phenotypic plasticity

When individuals of two species interact, they can adjust their phenotypes in response to their respective partner, be they antagonists or mutualists. The reciprocal phenotypic change between individuals of interacting species can reflect an evolutionary response to spatial and temporal variation in species interactions and ecologically result in the structuring of food chains. The evolution of adaptive phenotypic plasticity has led to the success of organisms in novel habitats, and potentially contributes to genetic differentiation and speciation. Taken together, phenotypic responses in species interactions represent modifications that can lead to reciprocal change in ecological time, altered community patterns, and expanded evolutionary potential of species.

Evolution of biological cooperation: an algorithmic approach

classical models from algorithm theory and mathematical methods from statistical mechanics, originally developed for neural networks, spin glasses, and Boolean satisfaction problems, can provide insights into the emergence of new forms of cooperation in life

This manuscript presents an algorithmic approach to cooperation in biological systems, drawing on fundamental ideas from statistical mechanics and probability theory. Fisher’s geometric model of adaptation suggests that the evolution of organisms well adapted to multiple constraints comes at a significant complexity cost. By utilizing combinatorial models of fitness, we demonstrate that the probability of adapting to all constraints decreases exponentially with the number of constraints, thereby generalizing Fisher’s result. Our main focus is understanding how cooperation can overcome this adaptivity barrier. Through these combinatorial models, we demonstrate that when an organism needs to adapt to a multitude of environmental variables, division of labor emerges as the only viable evolutionary strategy.
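The exponential cost of adapting to many constraints can be checked numerically in a toy combinatorial model. This sketch is an assumption, not the paper's model: a genotype is a random binary string, each environmental constraint is a random 3-literal OR clause over distinct loci (as in random 3-SAT), and a random genotype meets one clause with probability 7/8. Because clauses are drawn independently, the probability of meeting all K constraints is (7/8)^K.

```python
import numpy as np

def prob_all_constraints_met(n_genes=20, n_constraints=10, trials=5000, seed=0):
    """Monte Carlo probability that a random genotype satisfies K random 3-literal OR clauses."""
    rng = np.random.default_rng(seed)
    genotypes = rng.integers(0, 2, size=(trials, n_genes))
    # Three distinct loci per clause: the first three indices of a random permutation of the genes
    loci = np.argsort(rng.random((trials, n_constraints, n_genes)), axis=2)[:, :, :3]
    wanted = rng.integers(0, 2, size=(trials, n_constraints, 3))      # allele each literal requires
    alleles = genotypes[np.arange(trials)[:, None, None], loci]       # shape (trials, K, 3)
    clause_met = np.any(alleles == wanted, axis=2)                    # OR over the three literals
    return float(np.mean(np.all(clause_met, axis=1)))                 # AND over all K constraints

if __name__ == "__main__":
    for K in (1, 5, 10, 20):
        print(f"K={K}: simulated {prob_all_constraints_met(n_constraints=K):.4f}, (7/8)^K = {(7 / 8) ** K:.4f}")
```

Splitting the constraints among specialized cooperating units is where the paper's division-of-labor argument enters; that part is not modeled here.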