If you find Complexity Thoughts interesting, follow us! Click the Like button, leave a comment, repost on Substack, or share this post. It is the only feedback I get for this free service. The frequency and quality of this newsletter rely on social interactions. Thank you!

DALL-E 3 representation of this issue’s content

Foundations of network science and complex systems

Nonreciprocal Frustration: Time Crystalline Order-by-Disorder Phenomenon and a Spin-Glass-like State

Active systems are composed of constituents with interactions that are generically nonreciprocal in nature. Such nonreciprocity often gives rise to situations where conflicting objectives exist, such as in the case of a predator pursuing its prey, while the prey attempts to evade capture. This situation is somewhat reminiscent of those encountered in geometrically frustrated systems where conflicting objectives also exist, which result in the absence of configurations that simultaneously minimize all interaction energies. In the latter, a rich variety of exotic phenomena are known to arise due to the presence of accidental degeneracy of ground states. Here, we establish a direct analogy between these two classes of systems. The analogy is based on the observation that nonreciprocally interacting systems with antisymmetric coupling and geometrically frustrated systems have in common that they both exhibit marginal orbits, which can be regarded as a dynamical system counterpart of accidentally degenerate ground states. The former is shown by proving a Liouville-type theorem. These “accidental degeneracies” of orbits are shown to often get “lifted” by stochastic noise or weak random disorder due to the emergent “entropic force” to give rise to a noise-induced spontaneous symmetry breaking, in a similar manner to the order-by-disorder phenomena known to occur in geometrically frustrated systems. Furthermore, we report numerical evidence of a nonreciprocity-induced spin-glass-like state that exhibits a short-ranged spatial correlation (with stretched exponential decay) and an algebraic temporal correlation associated with the aging effect. Our work establishes an unexpected connection between the physics of complex magnetic materials and nonreciprocal matter, offering a fresh and valuable perspective for comprehending the latter.

Organisms Need Mechanisms; Mechanisms Need Organisms

According to new mechanists, mechanisms explain how specific biological phenomena are produced. New mechanists have had little to say about how mechanisms relate to the organism in which they reside. A key feature of organisms, emphasized by the autonomy tradition, is that organisms maintain themselves. To do this, they rely on mechanisms. But mechanisms must be controlled so that they produce the phenomena for which they are responsible when and in the manner needed by the organism. To account for how they are controlled, we characterize mechanisms as sets of constraints on the flow of free energy. Some constraints are flexible and can be acted on by other mechanisms, control mechanisms, that utilize information procured from the organism and its environment to alter the flexible constraints in other mechanisms so that they produce phenomena appropriate to the circumstances. We further show that control mechanisms in living organisms are organized heterarchically—control is carried out primarily by local controllers that integrate information they acquire as well as that which they procure from other control mechanisms. The result is not a hierarchy of control but an integrated network of control mechanisms that has been crafted over the course of evolution.

Biological Systems

Systems biology of stem cell fate and cellular reprogramming

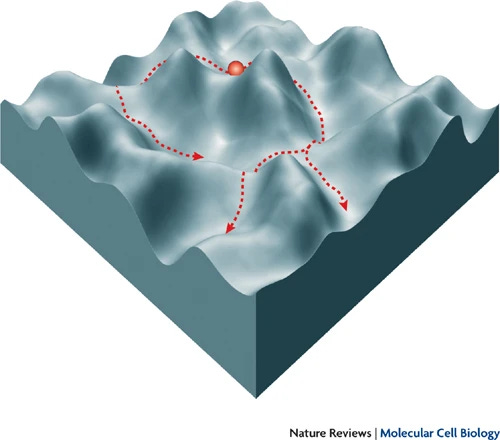

Stem cell differentiation and the maintenance of self-renewal are intrinsically complex processes that require the coordinated dynamic expression of hundreds of genes and proteins in precise response to external signalling cues. As computational tools can help identify patterns and elucidate structure in complex datasets, they are now beginning to be used in stem cell research to better understand this complexity. The representation of complex molecular regulatory interactions as networks is useful in conceptualizing this complexity. However, it is difficult to relate network architecture to cell behaviour in a quantitative way. The collective behaviour of complex regulatory networks can be explored by using techniques from dynamical systems theory and analysing cell types associated with attractors of underlying regulatory networks. Robust heterogeneity at the population level can arise from stochastic transitions between coexisting attractors driven by widespread molecular noise. Cellular reprogramming corresponds to navigation through a complex noisy attractor landscape. Understanding the relationships between stochasticity and determinism in defining cell fate might help decipher the molecular regulatory mechanisms of cellular reprogramming.
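To make the attractor picture a bit more concrete, here is a minimal toy sketch, not taken from the paper: a single variable `x` evolving in a double-well landscape, where the two wells at `x = -1` and `x = +1` stand in for two coexisting cell states. The equation, the function name `simulate_cell`, the switching thresholds, and all parameter values are illustrative assumptions.

```python
import math
import random


def simulate_cell(x0, noise, steps=20000, dt=0.01, seed=0):
    """Euler-Maruyama integration of dx = (x - x**3) dt + noise * dW.

    Toy model (an assumption, not the paper's): the deterministic part has
    two stable attractors at x = -1 and x = +1, separated by an unstable
    point at x = 0. Returns the final x and the number of basin switches.
    """
    rng = random.Random(seed)
    x = x0
    state = 1 if x > 0 else -1  # which attractor basin we start in
    switches = 0
    for _ in range(steps):
        drift = x - x**3
        x += drift * dt + noise * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        # Count a switch only once x is well inside the opposite basin,
        # so that jitter around x = 0 is not counted many times.
        if state == 1 and x < -0.5:
            state = -1
            switches += 1
        elif state == -1 and x > 0.5:
            state = 1
            switches += 1
    return x, switches


# Without noise the initial condition alone decides the final state.
x_plus, _ = simulate_cell(0.2, noise=0.0)
x_minus, _ = simulate_cell(-0.2, noise=0.0)

# With noise, the same "cell" hops between the two attractors over time.
_, n_switches = simulate_cell(1.0, noise=0.8, steps=50000)
```

In this sketch, running many noisy copies would give a mixed population even though every copy follows the same rules, which is the flavor of "robust heterogeneity" the abstract refers to; reprogramming would correspond to pushing `x` across the barrier at `x = 0`.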

Neuroscience

Keeping Your Brain in Balance: Homeostatic Regulation of Network Function

To perform computations with the efficiency necessary for animal survival, neocortical microcircuits must be capable of reconfiguring in response to experience, while carefully regulating excitatory and inhibitory connectivity to maintain stable function. This dynamic fine-tuning is accomplished through a rich array of cellular homeostatic plasticity mechanisms that stabilize important cellular and network features such as firing rates, information flow, and sensory tuning properties. Further, these functional network properties can be stabilized by different forms of homeostatic plasticity, including mechanisms that target excitatory or inhibitory synapses, or that regulate intrinsic neuronal excitability. Here we discuss which aspects of neocortical circuit function are under homeostatic control, how this homeostasis is realized on the cellular and molecular levels, and the pathological consequences when circuit homeostasis is impaired. A remaining challenge is to elucidate how these diverse homeostatic mechanisms cooperate within complex circuits to enable them to be both flexible and stable.

Human behavior

Bayesianism and wishful thinking are compatible

Bayesian principles show up across many domains of human cognition, but wishful thinking—where beliefs are updated in the direction of desired outcomes rather than what the evidence implies—seems to threaten the universality of Bayesian approaches to the mind. In this Article, we show that Bayesian optimality and wishful thinking are, despite first appearances, compatible. The setting of opposing goals can cause two groups of people with identical prior beliefs to reach opposite conclusions about the same evidence through fully Bayesian calculations. We show that this is possible because, when people set goals, they receive privileged information in the form of affective experiences, and this information systematically supports goal-consistent conclusions. We ground this idea in a formal, Bayesian model in which affective prediction errors drive wishful thinking. We obtain empirical support for our model across five studies.

Machine Learning & Artificial intelligence

Automated discovery of algorithms from data

Big promises from this paper.


To automate the discovery of new scientific and engineering principles, artificial intelligence must distill explicit rules from experimental data. This has proven difficult because existing methods typically search through the enormous space of possible functions. Here we introduce deep distilling, a machine learning method that does not perform searches but instead learns from data using symbolic essence neural networks and then losslessly condenses the network parameters into a concise algorithm written in computer code. This distilled code, which can contain loops and nested logic, is equivalent to the neural network but is human-comprehensible and orders-of-magnitude more compact. On arithmetic, vision and optimization tasks, the distilled code is capable of out-of-distribution systematic generalization to solve cases orders-of-magnitude larger and more complex than the training data. The distilled algorithms can sometimes outperform human-designed algorithms, demonstrating that deep distilling is able to discover generalizable principles complementary to human expertise.

Initialization is critical for preserving global data structure in both t-SNE and UMAP

Some methods are routinely used to embed large-scale data, with the goal of finding meaningful (geometric) patterns. For instance, this is a widespread approach for the analysis of single-cell data:

However, a blind application of these methods (as usual, for any method) might lead to undesired outcomes:

One of the most ubiquitous analysis tools in single-cell transcriptomics and cytometry is t-distributed stochastic neighbor embedding (t-SNE)1, which is used to visualize individual cells as points on a two-dimensional scatterplot such that similar cells are positioned close together2. A related algorithm, called uniform manifold approximation and projection (UMAP)3, has attracted substantial attention in the single-cell community4. In Nature Biotechnology, Becht et al.4 argued that UMAP is preferable to t-SNE because it better preserves the global structure of the data and is more consistent across runs. Here we show that this alleged superiority of UMAP can be entirely attributed to different choices of initialization in the implementations used by Becht et al.: the t-SNE implementations by default used random initialization, while the UMAP implementation used a technique called Laplacian eigenmaps (LE)5 to initialize the embedding. We show that UMAP with random initialization preserves global structure as poorly as t-SNE with random initialization, while t-SNE with informative initialization performs as well as UMAP with informative initialization. On the basis of these observations, we argue that there is currently no evidence that the UMAP algorithm per se has any advantage over t-SNE in terms of preserving global structure. We also contend that these algorithms should always use informative initialization by default.
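As a rough illustration of what "informative initialization" can look like in practice, the sketch below builds a starting layout from the first two principal components and compares it with a random one. The paper discusses Laplacian eigenmaps for UMAP; PCA is swapped in here as a simpler informative choice. The function names `pca_init` and `random_init`, the `1e-4` scale, and the toy data are assumptions made for this example.

```python
import numpy as np


def pca_init(X, n_components=2, target_std=1e-4):
    """Informative initialization: project onto the leading principal
    components, then rescale so the first coordinate has a small spread
    (target_std is an assumed convention, not a value from the paper)."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                      # center the features
    U, S, _ = np.linalg.svd(Xc, full_matrices=False)
    Y = U[:, :n_components] * S[:n_components]   # PCA scores
    return Y / Y[:, 0].std() * target_std


def random_init(n_samples, n_components=2, target_std=1e-4, seed=0):
    """Uninformative initialization: small isotropic Gaussian noise."""
    rng = np.random.default_rng(seed)
    return rng.normal(scale=target_std, size=(n_samples, n_components))


# Toy data: three clusters lined up along the first of ten dimensions.
rng = np.random.default_rng(42)
labels = np.repeat(np.arange(3), 50)
X = rng.normal(size=(150, 10))
X[:, 0] += 10.0 * labels                         # centers at 0, 10, 20

Y_pca = pca_init(X)            # clusters already ordered along axis 0
Y_rand = random_init(len(X))   # cluster order is lost at the start
```

Arrays like these can be handed to an embedding routine as its starting positions; for instance, scikit-learn's `TSNE` accepts `init="pca"`, `init="random"`, or an array of shape `(n_samples, n_components)`. The point of the comparison is only that the PCA layout already encodes the large-scale arrangement of the clusters before any optimization starts.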

Learning the intrinsic dynamics of spatio-temporal processes through Latent Dynamics Networks

Predicting the evolution of systems with spatio-temporal dynamics in response to external stimuli is essential for scientific progress. Traditional equations-based approaches leverage first principles through the numerical approximation of differential equations, thus demanding extensive computational resources. In contrast, data-driven approaches leverage deep learning algorithms to describe system evolution in low-dimensional spaces. We introduce an architecture, termed Latent Dynamics Network, capable of uncovering low-dimensional intrinsic dynamics in potentially non-Markovian systems. Latent Dynamics Networks automatically discover a low-dimensional manifold while learning the system dynamics, eliminating the need for training an auto-encoder and avoiding operations in the high-dimensional space. They predict the evolution, even in time-extrapolation scenarios, of space-dependent fields without relying on predetermined grids, thus enabling weight-sharing across query-points. Lightweight and easy-to-train, Latent Dynamics Networks demonstrate superior accuracy (normalized error 5 times smaller) in highly-nonlinear problems with significantly fewer trainable parameters (more than 10 times fewer) compared to state-of-the-art methods.

So glad to finally see papers about this. Here, Jaeger confirms part of what I wrote when the AGI hype accompanied the advent of ChatGPT.

What is the prospect of developing artificial general intelligence (AGI)? I investigate this question by systematically comparing living and algorithmic systems, with a special focus on the notion of “agency.” There are three fundamental differences to consider: (1) Living systems are autopoietic, that is, self-manufacturing, and therefore able to set their own intrinsic goals, while algorithms exist in a computational environment with target functions that are both provided by an external agent. (2) Living systems are embodied in the sense that there is no separation between their symbolic and physical aspects, while algorithms run on computational architectures that maximally isolate software from hardware. (3) Living systems experience a large world, in which most problems are ill defined (and not all definable), while algorithms exist in a small world, in which all problems are well defined. These three differences imply that living and algorithmic systems have very different capabilities and limitations. In particular, it is extremely unlikely that true AGI (beyond mere mimicry) can be developed in the current algorithmic framework of AI research. Consequently, discussions about the proper development and deployment of algorithmic tools should be shaped around the dangers and opportunities of current narrow AI, not the extremely unlikely prospect of the emergence of true agency in artificial systems.

Further readings from our archive:

Navigating the transformative potential of generative AI: a complex systems perspective

Recently, I had the chance to have a cold drink in sunny Venice with two friends and colleagues: Alex Arenas, professor at the Universitat Rovira i Virgili, and Ricard Solé, professor at the Universitat Pompeu Fabra and the Santa Fe Institute. A rare opportunity to discuss complex systems and hot topics, such as the most recent developments …

From science fiction to science facts: understanding the technological singularity

This short essay follows a previous one about the hype around emergent abilities in AI-powered systems, such as large language models. With the present one, I would like to discuss another highly debated (potential) effect of emergent abilities in AI systems: the