If you find Complexity Thoughts interesting, follow us! Click the Like button, leave a comment, repost on Substack or share this post. It is the only feedback I get for this free service. The frequency and quality of this newsletter rely on social interactions. Thank you!

Foundations of network science and complex systems

Many complex systems—from the Internet to social, biological, and communication networks—are thought to exhibit scale-free structure. However, prevailing explanations require that networks grow over time, an assumption that fails in some real-world settings. Here, we explain how scale-free structure can emerge without growth through network self-organization. Beginning with an arbitrary network, we allow connections to detach from random nodes and then reconnect under a mixture of preferential and random attachment. While the numbers of nodes and edges remain fixed, the degree distribution evolves toward a power-law with an exponent γ=1+1/p that depends only on the proportion p of preferential (rather than random) attachment. Applying our model to several real networks, we infer p directly from data and predict the relationship between network size and degree heterogeneity. Together, these results establish how scale-free structure can arise in networks of constant size and density, with broad implications for the structure and function of complex systems.
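The rewiring process described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The function names `rewire` and `predicted_exponent` are hypothetical, and the detach/reattach rule is our reading of the abstract: pick a node uniformly at random, detach one of its edge ends, and reattach that end either preferentially (probability p) or uniformly at random (probability 1 − p). Self-loops are rejected and multi-edges are allowed. Preferential targets are drawn by picking a uniformly random edge end, which lands on a node with probability proportional to its degree.

```python
import random
from collections import Counter


def predicted_exponent(p):
    """Degree exponent gamma = 1 + 1/p quoted in the abstract (0 < p <= 1)."""
    return 1.0 + 1.0 / p


def rewire(n_nodes, edges, p, steps, seed=0):
    """Toy detach/reattach dynamics with fixed numbers of nodes and edges.

    Assumed rule (hypothetical reading of the abstract): pick a node uniformly
    at random, detach one of its edge ends, and reattach that end to a target
    chosen preferentially (probability p) or uniformly at random (1 - p).
    Self-loops are rejected; multi-edges are allowed in this sketch.
    """
    rng = random.Random(seed)
    edges = [list(e) for e in edges]  # mutable copy, each edge is [u, v]
    for _ in range(steps):
        node = rng.randrange(n_nodes)
        # All edge ends attached to this node, as (edge index, side) pairs.
        ends = [(k, s) for k, e in enumerate(edges) for s in (0, 1) if e[s] == node]
        if not ends:
            continue  # isolated node: nothing to detach
        k, s = rng.choice(ends)
        other = edges[k][1 - s]  # the endpoint that stays put
        while True:
            if rng.random() < p:
                # Preferential: a uniformly random edge end sits on a node
                # with probability proportional to that node's degree.
                target = edges[rng.randrange(len(edges))][rng.randrange(2)]
            else:
                target = rng.randrange(n_nodes)  # uniform random attachment
            if target != other:
                break  # reject self-loops and redraw
        edges[k][s] = target
    return [tuple(e) for e in edges]


if __name__ == "__main__":
    ring = [(i, (i + 1) % 100) for i in range(100)]
    out = rewire(100, ring, p=0.8, steps=5000, seed=1)
    degrees = Counter(x for e in out for x in e)
    print("max degree:", max(degrees.values()))
    print("predicted gamma for p = 0.8:", predicted_exponent(0.8))
```

The node and edge counts never change, only the endpoints move. For p = 0.8 the formula in the abstract predicts γ = 1 + 1/0.8 = 2.25; checking that exponent would need much larger networks, longer runs and a proper power-law fit than this toy call.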

Mutual Linearity of Nonequilibrium Network Currents

For continuous-time Markov chains and open unimolecular chemical reaction networks, we prove that any two stationary currents are linearly related upon perturbations of a single edge’s transition rates, arbitrarily far from equilibrium. We extend the result to nonstationary currents in the frequency domain, provide and discuss an explicit expression for the current-current susceptibility in terms of the network topology, and discuss possible generalizations. In practical scenarios, the mutual linearity relation has predictive power and can be used as a tool for inference or model proof testing.
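The mutual linearity statement is easy to check numerically. The sketch below is our own illustration, not the authors' code: it builds a hypothetical 4-state Markov chain (a ring plus one chord), randomly perturbs both transition rates of the chord (0, 2), and records the stationary currents on two other edges. If the statement holds, all the (J_01, J_23) pairs fall on one straight line, so the residual of a linear fit is at machine precision. One way to see why: the perturbed edge acts on the rest of the network only through its own current J_e, so the stationary distribution, and hence every other current, is an affine function of J_e. This argument assumes the network stays connected when that edge is removed.

```python
import numpy as np


def stationary_distribution(rates):
    """Stationary distribution of a CTMC; rates[i, j] is the rate i -> j."""
    n = rates.shape[0]
    gen = rates.T.copy()             # gen[j, i] = rate i -> j
    np.fill_diagonal(gen, 0.0)
    gen -= np.diag(gen.sum(axis=0))  # columns sum to zero: d(pi)/dt = gen @ pi
    # Append the normalisation sum(pi) = 1 and solve in the least-squares sense
    a = np.vstack([gen, np.ones(n)])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    pi, *_ = np.linalg.lstsq(a, b, rcond=None)
    return pi


def edge_current(rates, pi, i, j):
    """Net stationary probability current along edge i -> j."""
    return pi[i] * rates[i, j] - pi[j] * rates[j, i]


def mutual_linearity_check(seed=0, samples=25):
    """Perturb edge (0, 2) at random; fit J_23 against J_01 with a straight line."""
    rng = np.random.default_rng(seed)
    edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]  # 4-state ring plus a chord
    base = np.zeros((4, 4))
    for i, j in edges:
        base[i, j], base[j, i] = rng.uniform(0.5, 2.0, size=2)
    jf, jg = [], []
    for _ in range(samples):
        rates = base.copy()
        # Change both rates of the single edge (0, 2) arbitrarily
        rates[0, 2], rates[2, 0] = rng.uniform(0.01, 10.0, size=2)
        pi = stationary_distribution(rates)
        jf.append(edge_current(rates, pi, 0, 1))
        jg.append(edge_current(rates, pi, 2, 3))
    jf, jg = np.array(jf), np.array(jg)
    slope, intercept = np.polyfit(jf, jg, 1)
    residual = np.max(np.abs(jg - (slope * jf + intercept)))
    return slope, intercept, residual


if __name__ == "__main__":
    slope, intercept, residual = mutual_linearity_check()
    print(f"J_23 = {slope:.4f} * J_01 + {intercept:.4f}, max residual {residual:.1e}")
```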

The Ising model celebrates a century of interdisciplinary contributions

Interesting review, featuring several applications of this emblematic model to a variety of empirical systems from different domains.

The centennial of the Ising model marks a century of interdisciplinary contributions that extend well beyond ferromagnets, including the evolution of language, volatility in financial markets, mood swings, scientific collaboration, the persistence of unintended neighborhood segregation, and asymmetric hysteresis in political polarization. The puzzle is how anything could be learned about social life from a toy model of second order ferromagnetic phase transitions on a periodic network. Our answer points to Ising’s deeper contribution: a bottom-up modeling approach that explores phase transitions in population behavior that emerge spontaneously through the interplay of individual choices at the micro-level of interactions among network neighbors.
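As a concrete instance of that bottom-up approach, here is a minimal Metropolis simulation of the Ising model on a periodic square lattice, where each spin can be read as a binary individual choice influenced by its four neighbors. The function name and parameter values are illustrative assumptions; the coupling J and temperature T are in units where k_B = 1. On this lattice the exact (Onsager) critical temperature is T_c = 2/ln(1 + √2) ≈ 2.269, so runs well below it stay ordered and runs well above it lose order.

```python
import math
import random


def metropolis_ising(L, T, sweeps, J=1.0, seed=0):
    """Metropolis dynamics of the 2D Ising model on an L x L periodic lattice.

    Spins start all +1 and each update proposes flipping one random spin.
    Returns the final magnetization per spin, in [-1, 1].
    """
    rng = random.Random(seed)
    s = [[1] * L for _ in range(L)]
    for _ in range(sweeps):
        for _ in range(L * L):  # one sweep = L*L attempted flips
            i, j = rng.randrange(L), rng.randrange(L)
            # Sum of the four nearest neighbors (periodic boundaries)
            nb = (s[(i + 1) % L][j] + s[(i - 1) % L][j]
                  + s[i][(j + 1) % L] + s[i][(j - 1) % L])
            # Energy change of flipping s[i][j] for E = -J * sum_<ij> s_i s_j
            d_e = 2.0 * J * s[i][j] * nb
            if d_e <= 0 or rng.random() < math.exp(-d_e / T):
                s[i][j] = -s[i][j]
    return sum(map(sum, s)) / (L * L)


if __name__ == "__main__":
    t_c = 2.0 / math.log(1.0 + math.sqrt(2.0))  # Onsager, about 2.269
    for T in (1.0, t_c, 10.0):
        print(f"T = {T:.3f}: m = {metropolis_ising(20, T, 200, seed=1):+.3f}")
```

Replacing the lattice neighbors with the neighbors of an arbitrary social network is the natural next step for the applications the review discusses; the update rule stays the same.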

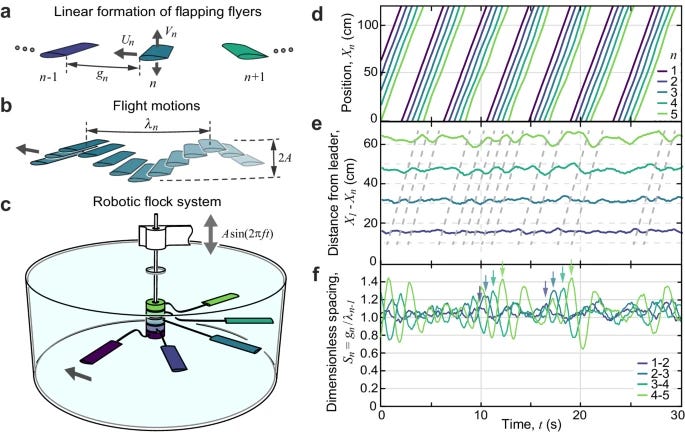

Flow interactions lead to self-organized flight formations disrupted by self-amplifying waves

Collectively locomoting animals are often viewed as analogous to states of matter in that group-level phenomena emerge from individual-level interactions. Applying this framework to fish schools and bird flocks must account for visco-inertial flows as mediators of the physical interactions. Motivated by linear flight formations, here we show that pairwise flow interactions tend to promote crystalline or lattice-like arrangements, but such order is disrupted by unstably growing positional waves. Using robotic experiments on “mock flocks” of flapping wings in forward flight, we find that followers tend to lock into position behind a leader, but larger groups display flow-induced oscillatory modes – “flonons” – that grow in amplitude down the group and cause collisions. Force measurements and applied perturbations inform a wake interaction model that explains the self-ordering as mediated by spring-like forces and the self-amplification of disturbances as a resonance cascade. We further show that larger groups may be stabilized by introducing variability among individuals, which induces positional disorder while suppressing flonon amplification. These results derive from generic features including locomotor-flow phasing and nonreciprocal interactions with memory, and hence these phenomena may arise more generally in macroscale, flow-mediated collectives.
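The "resonance cascade" idea can be illustrated with a deliberately simple toy model, which is our own assumption and not the wake interaction model of the paper. Each follower is a damped oscillator pulled by a spring toward the member ahead, with no force acting back (a nonreciprocal coupling); the flow memory the authors emphasize is ignored. If the leader oscillates at frequency ω, each follower responds with gain |H(ω)| = k/|k − ω² + iγω|, so amplitudes grow geometrically down the group whenever that gain exceeds 1, as happens near resonance with weak damping.

```python
def cascade_amplitudes(n_followers, omega, k=1.0, gamma=0.5, a0=1.0):
    """Steady-state oscillation amplitudes down a one-way chain (toy model).

    Assumed toy dynamics, not the paper's wake model: follower n obeys
        x_n'' + gamma * x_n' + k * x_n = k * x_{n-1},
    i.e. a spring pulls it toward the member ahead, with no force acting back.
    The leader (n = 0) oscillates with amplitude a0 at frequency omega.
    """
    # Complex gain from x_{n-1} to x_n at the driving frequency omega
    gain = k / complex(k - omega ** 2, gamma * omega)
    return [a0 * abs(gain) ** n for n in range(n_followers + 1)]


if __name__ == "__main__":
    print(cascade_amplitudes(5, omega=1.0))  # at resonance (omega**2 == k)
    print(cascade_amplitudes(5, omega=3.0))  # far from resonance
```

Giving each follower its own stiffness (and hence its own resonance frequency) is one way to explore, within this toy, how variability among individuals could detune the cascade.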

Flocking, as paradigmatically exemplified by birds, is the coherent collective motion of active agents. As originally conceived, flocking emerges through alignment interactions between the agents. Here, we report that flocking can also emerge through interactions that turn agents away from each other. Combining simulations, kinetic theory, and experiments, we demonstrate this mechanism of flocking in self-propelled Janus colloids with stronger repulsion on the front than on the rear. The polar state is stable because particles achieve a compromise between turning away from left and right neighbors. Unlike for alignment interactions, the emergence of polar order from turn-away interactions requires particle repulsion. At high concentration, repulsion produces flocking Wigner crystals. Whereas repulsion often leads to motility-induced phase separation of active particles, here it combines with turn-away torques to produce flocking. Therefore, our findings bridge the classes of aligning and nonaligning active matter. Our results could help to reconcile the observations that cells can flock despite turning away from each other via contact inhibition of locomotion. Overall, our work shows that flocking is a very robust phenomenon that arises even when the orientational interactions would seem to prevent it.

Early Predictor for the Onset of Critical Transitions in Networked Dynamical Systems

The results are interesting: the authors feed the observed dynamics into a deep-learning architecture to predict upcoming critical transitions. They do not use network information for this task, but we have recently shown in this paper that network information can substantially improve early-warning signals of systemic collapse.

Would it be worth combining the two approaches to get the most out of both structure and dynamics?

Numerous natural and human-made systems exhibit critical transitions whereby slow changes in environmental conditions spark abrupt shifts to a qualitatively distinct state. These shifts very often entail severe consequences; therefore, it is imperative to devise robust and informative approaches for anticipating the onset of critical transitions. Real-world complex systems can comprise hundreds or thousands of interacting entities, and implementing prevention or management strategies for critical transitions requires knowledge of the exact condition in which they will manifest. However, most research so far has focused on low-dimensional systems and small networks containing fewer than ten nodes or has not provided an estimate of the location where the transition will occur. We address these weaknesses by developing a deep-learning framework which can predict the specific location where critical transitions happen in networked systems with size up to hundreds of nodes. These predictions do not rely on the network topology, the edge weights, or the knowledge of system dynamics. We validate the effectiveness of our machine-learning-based framework by considering a diverse selection of systems representing both smooth (second-order) and explosive (first-order) transitions: the synchronization transition in coupled Kuramoto oscillators; the sharp decline in the resource biomass present in an ecosystem; and the abrupt collapse of a Wilson-Cowan neuronal system. We show that our method provides accurate predictions for the onset of critical transitions well in advance of their occurrences, is robust to noise and transient data, and relies only on observations of a small fraction of nodes. Finally, we demonstrate the applicability of our approach to real-world systems by considering empirical vegetated ecosystems in Africa.
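One of the benchmark systems mentioned above, the synchronization transition of coupled Kuramoto oscillators, is easy to reproduce. The sketch below integrates the all-to-all (mean-field) Kuramoto model with forward Euler and reports the time-averaged order parameter r. The all-to-all topology, parameter values and the function name `kuramoto_order` are illustrative assumptions, and nothing here reproduces the deep-learning predictor itself. For natural frequencies drawn from a standard normal distribution, the infinite-size critical coupling is K_c = 2/(π g(0)) = √(8/π) ≈ 1.6, so r stays near zero (up to finite-size fluctuations) below it and approaches 1 well above it.

```python
import numpy as np


def kuramoto_order(K, n=200, dt=0.05, steps=4000, seed=0):
    """Time-averaged Kuramoto order parameter r for all-to-all coupling K.

    Uses the mean-field form d(theta_i)/dt = omega_i + K r sin(psi - theta_i),
    which is equivalent to (K/n) * sum_j sin(theta_j - theta_i).
    """
    rng = np.random.default_rng(seed)
    omega = rng.standard_normal(n)            # natural frequencies ~ N(0, 1)
    theta = rng.uniform(0.0, 2.0 * np.pi, n)  # random initial phases
    r_values = []
    for step in range(steps):
        z = np.exp(1j * theta).mean()         # z = r * exp(i * psi)
        r, psi = np.abs(z), np.angle(z)
        theta = theta + dt * (omega + K * r * np.sin(psi - theta))  # Euler step
        if step >= steps // 2:                # discard the first half as transient
            r_values.append(r)
    return float(np.mean(r_values))


if __name__ == "__main__":
    for K in (0.5, 1.0, 1.5, 2.0, 3.0, 4.0):
        print(f"K = {K}: r = {kuramoto_order(K):.2f}")
```

Sweeping K slowly and recording r is the kind of trajectory whose onset a predictor would need to anticipate; the mean-field form keeps each step O(n) instead of O(n²).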

Laying down a forking path: Tensions between enaction and the free energy principle

Several authors have made claims about the compatibility between the Free Energy Principle (FEP) and theories of autopoiesis and enaction. Many see these theories as natural partners or as making similar statements about the nature of biological and cognitive systems. We critically examine these claims and identify a series of misreadings and misinterpretations of key enactive concepts. In particular, we notice a tendency to disregard the operational definition of autopoiesis and the distinction between a system’s structure and its organization. Other misreadings concern the conflation of processes of self-distinction in operationally closed systems and Markov blankets. Deeper theoretical tensions underlie some of these misinterpretations. FEP assumes systems that reach a non-equilibrium steady state and are enveloped by a Markov blanket. We argue that these assumptions contradict the historicity of sense-making that is explicit in the enactive approach. Enactive concepts such as adaptivity and agency are defined in terms of the modulation of parameters and constraints of the agent-environment coupling, which entail the possibility of changes in variable and parameter sets, constraints, and in the dynamical laws affecting the system. This allows enaction to address the path-dependent diversity of human bodies and minds. We argue that these ideas are incompatible with the time invariance of non-equilibrium steady states assumed by the FEP. In addition, the enactive perspective foregrounds the enabling and constitutive roles played by the world in sense-making, agency, and development. We argue that this view of transactional and constitutive relations between organisms and environments is a challenge to the FEP.
Once we move beyond superficial similarities, identify misreadings, and examine the theoretical commitments of the two approaches, we reach the conclusion that, far from being easily integrated, the FEP, as currently formulated, is in tension with the theories of autopoiesis and enaction.

Evolution

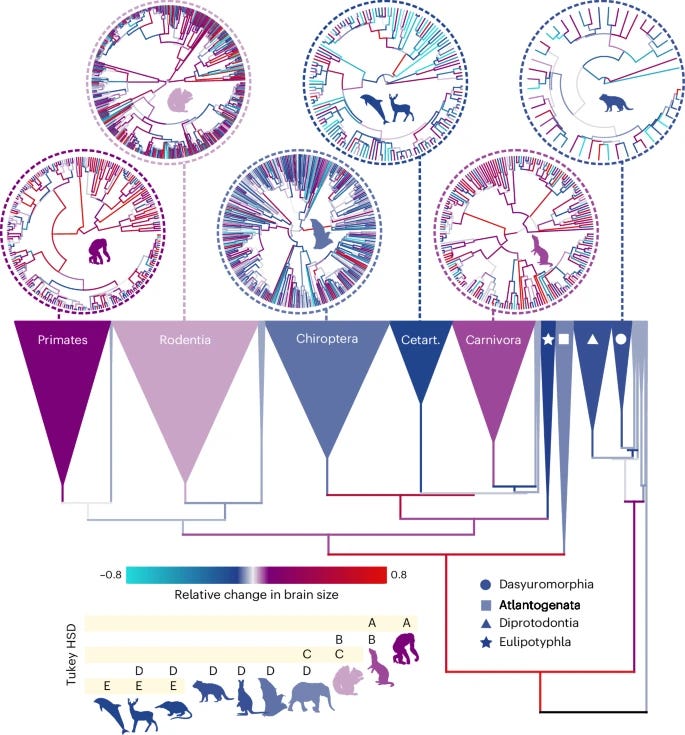

Co-evolutionary dynamics of mammalian brain and body size

Despite decades of comparative studies, puzzling aspects of the relationship between mammalian brain and body mass continue to defy satisfactory explanation. Here we show that several such aspects arise from routinely fitting log-linear models to the data: the correlated evolution of brain and body mass is in fact log-curvilinear. This simultaneously accounts for several phenomena for which diverse biological explanations have been proposed, notably variability in scaling coefficients across clades, low encephalization in larger species and the so-called taxon-level problem. Our model implies a need to revisit previous findings about relative brain mass. Accounting for the true scaling relationship, we document dramatically varying rates of relative brain mass evolution across the mammalian phylogeny, and we resolve the question of whether there is an overall trend for brain mass to increase through time. We find a trend in only three mammalian orders, which is by far the strongest in primates, setting the stage for the uniquely rapid directional increase ultimately producing the computational powers of the human brain.
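The difference between a log-linear and a log-curvilinear fit can be seen on synthetic data. Below, log brain mass is assumed (hypothetically) to be a quadratic function of log body mass. Fitting both models with NumPy's `polyfit` shows that the curvilinear model implies a local scaling exponent c1 + 2·c2·log10(M) that changes with body mass M, so clades sampled over different mass ranges would report different log-linear slopes. The coefficients, masses and helper names are made up for illustration and are not estimates from the paper.

```python
import numpy as np


def fit_log_models(body_mass, brain_mass):
    """Fit log-linear and log-quadratic (curvilinear) models in log10 space."""
    x, y = np.log10(body_mass), np.log10(brain_mass)
    linear = np.polyfit(x, y, 1)     # [slope, intercept]
    quadratic = np.polyfit(x, y, 2)  # [c2, c1, c0]
    return linear, quadratic


def local_slope(quadratic, body_mass):
    """Local scaling exponent d log(brain) / d log(body) = c1 + 2 c2 log10(M)."""
    c2, c1, _ = quadratic
    return c1 + 2.0 * c2 * np.log10(body_mass)


# Hypothetical noise-free "true" curve: c0 = -1.0, c1 = 0.9, c2 = -0.02
body = np.logspace(0, 6, 50)  # body masses spanning six orders of magnitude
brain = 10 ** (-1.0 + 0.9 * np.log10(body) - 0.02 * np.log10(body) ** 2)

linear, quadratic = fit_log_models(body, brain)
print("single log-linear slope:", round(float(linear[0]), 3))
print("local slope at M = 10:", round(float(local_slope(quadratic, 10.0)), 3))
print("local slope at M = 1e5:", round(float(local_slope(quadratic, 1e5)), 3))
```

With real data the curve would be fitted within a phylogenetic framework rather than by ordinary least squares; this sketch only shows why a single slope cannot summarize a curved relationship.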

Biological Systems

Rage against the what? The machine metaphor in biology

Machine metaphors abound in life sciences: animals as automata, mitochondria as engines, brains as computers. Philosophers have criticized machine metaphors for implying that life functions mechanically, misleading research. This approach misses a crucial point in applying machine metaphors to biological phenomena: their reciprocity. Analogical modeling of machines and biological entities is not a one-way street where our understanding of biology must obey a mechanical conception of machines. While our understanding of biological phenomena undoubtedly has been shaped by machine metaphors, the resulting insights have likewise altered our understanding of what machines are and what they can do.

Origin of life

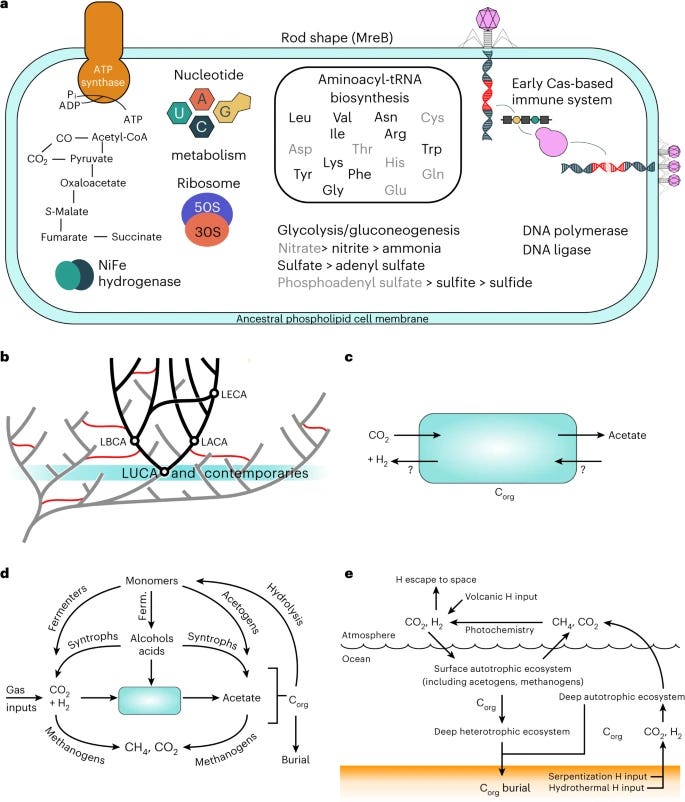

The nature of the last universal common ancestor and its impact on the early Earth system

The concept of a "common ancestor" that connects all living organisms is a cornerstone of evolutionary biology, and this paper sheds new light on this ancient progenitor, which is usually referred to as the last universal common ancestor, or LUCA.

Indeed, LUCA is the oldest node that can be reconstructed using phylogenetic methods.

In this paper, the authors analyzed the genetics of 700 modern microbes and found that LUCA lived around 4.2 billion years ago (4.09–4.33 Ga). Why is that cool? For several reasons!

A commonly referenced date for LUCA was about 3.8 billion years ago, so the new estimate suggests that complex cellular life on Earth is older than previously thought.

The study suggests that LUCA was not a simple organism but had a complex, well-developed cellular structure and metabolic capabilities, challenging the idea that early life forms were overly simplistic. With its roughly 2,600 proteins and sophisticated metabolism, LUCA indicates that substantial evolutionary innovation had already taken place much earlier than previously thought.

LUCA had a defense system against viruses, and was likely part of an established ecological system.

I also recommend reading this coverage from Science.

The nature of the last universal common ancestor (LUCA), its age and its impact on the Earth system have been the subject of vigorous debate across diverse disciplines, often based on disparate data and methods. Age estimates for LUCA are usually based on the fossil record, varying with every reinterpretation. The nature of LUCA’s metabolism has proven equally contentious, with some attributing all core metabolisms to LUCA, whereas others reconstruct a simpler life form dependent on geochemistry. Here we infer that LUCA lived ~4.2 Ga (4.09–4.33 Ga) through divergence time analysis of pre-LUCA gene duplicates, calibrated using microbial fossils and isotope records under a new cross-bracing implementation. Phylogenetic reconciliation suggests that LUCA had a genome of at least 2.5 Mb (2.49–2.99 Mb), encoding around 2,600 proteins, comparable to modern prokaryotes. Our results suggest LUCA was a prokaryote-grade anaerobic acetogen that possessed an early immune system. Although LUCA is sometimes perceived as living in isolation, we infer LUCA to have been part of an established ecological system. The metabolism of LUCA would have provided a niche for other microbial community members and hydrogen recycling by atmospheric photochemistry could have supported a modestly productive early ecosystem.

Bio-inspired computing

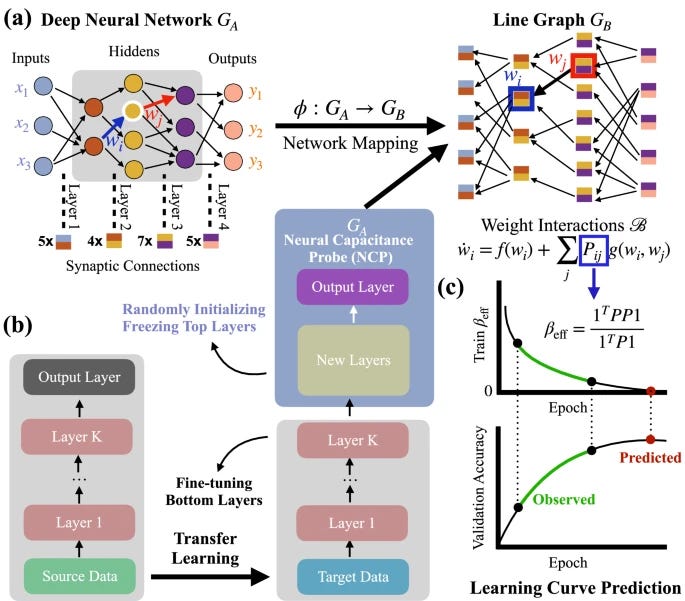

Network properties determine neural network performance

Machine learning influences numerous aspects of modern society, empowers new technologies, from AlphaGo to ChatGPT, and increasingly materializes in consumer products such as smartphones and self-driving cars. Despite the vital role and broad applications of artificial neural networks, we lack systematic approaches, such as network science, to understand their underlying mechanism. The difficulty is rooted in many possible model configurations, each with different hyper-parameters and weighted architectures determined by noisy data. We bridge the gap by developing a mathematical framework that maps the neural network’s performance to the network characteristics of the line graph governed by the edge dynamics of stochastic gradient descent differential equations. This framework enables us to derive a neural capacitance metric to universally capture a model’s generalization capability on a downstream task and predict model performance using only early training results. The numerical results on 17 pre-trained ImageNet models across five benchmark datasets and one NAS benchmark indicate that our neural capacitance metric is a powerful indicator for model selection based only on early training results and is more efficient than state-of-the-art methods.
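To make the line-graph idea concrete: in the line graph of a network, every edge becomes a node, and two such nodes are linked when the original edges share an endpoint. Applied to a small fully connected feed-forward network, each weight becomes a node, so weight dynamics under SGD can be viewed as node dynamics on this new graph. The sketch below builds a generic, undirected line graph with hypothetical helper names (`mlp_edges`, `line_graph`); the paper's exact construction (direction, weighting, and how the neural capacitance metric is computed from it) is not reproduced here.

```python
from itertools import combinations


def mlp_edges(layer_sizes):
    """Edges (u, v) of a fully connected feed-forward net; neurons numbered layer by layer."""
    edges, offset = [], 0
    for a, b in zip(layer_sizes, layer_sizes[1:]):
        # Connect every neuron of this layer to every neuron of the next one
        edges += [(offset + i, offset + a + j) for i in range(a) for j in range(b)]
        offset += a
    return edges


def line_graph(edges):
    """Undirected line graph: one node per weight (edge index); two weights
    are linked if they share a neuron."""
    incident = {}
    for idx, (u, v) in enumerate(edges):
        incident.setdefault(u, []).append(idx)
        incident.setdefault(v, []).append(idx)
    links = set()
    for ids in incident.values():
        links.update(combinations(sorted(ids), 2))
    return links


if __name__ == "__main__":
    weights = mlp_edges([2, 3, 1])
    print(len(weights), "weights,", len(line_graph(weights)), "line-graph links")
```

Each neuron of degree d contributes d(d − 1)/2 links, so the line graph of even a modest network is much denser than the network itself; that cost is worth keeping in mind before scaling the construction to ImageNet-sized models.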
