Complexity Thoughts: Issue #73

Unraveling complexity: building knowledge, one paper at a time

If you find value in #ComplexityThoughts, consider helping it grow by subscribing and sharing it with friends, colleagues or on social media. Your support makes a real difference.

→ Don’t miss the podcast version of this post: click on “Spotify/Apple Podcast” above!

From the Lab

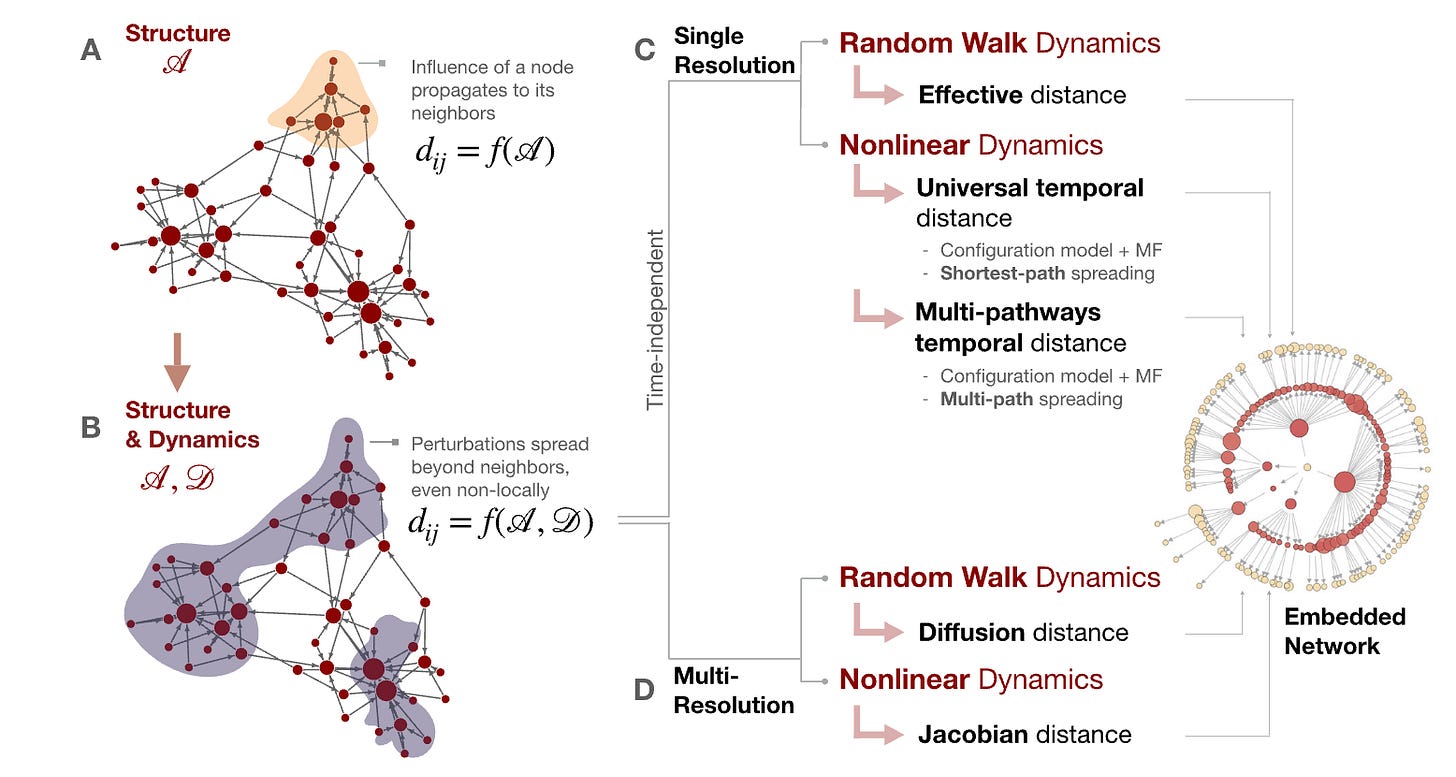

Latent geometry emerging from network-driven processes

It has been more than a decade since our community started to characterize the geometric signatures of network dynamics.

Beyond offering elegant mathematical tools, this geometric formalism provides a unifying lens to interpret how complex systems function, turning high-dimensional heterogeneous interactions into interpretable geometric structure and enabling more reliable prediction, control and comparison of network function across domains.

By reconstructing latent spaces directly from dynamics, we gain access to the mesoscale principles that govern information flow in biological, social and technological networks, e.g., clarifying why functional brain modules integrate as observed, how ecosystems respond to perturbations or how contagion fronts propagate. An exciting developing branch of network science!

Understanding network functionality requires integrating structure and dynamics, and emergent latent geometry induced by network-driven processes captures the low-dimensional spaces governing this interplay. In this Perspective, we review generative-model-based approaches, distinguishing two reconstruction classes: fixed-time methods, which infer geometry at specific temporal scales (e.g., equilibrium), and multi-resolution methods, which integrate dynamics across near- and far-from-equilibrium states. Over the past decade, these models have revealed functional organization in biological, social, and technological networks. Hence, we provide a unified overview of these methods, with particular attention to the underlying mathematical constructions. Further, we point to promising extensions of these frameworks, which combine the previously developed methods to other well-established analytical frameworks.

Foundations of network science and complex systems

Self-Reinforcing Cascades: A Spreading Model for Beliefs or Products of Varying Intensity or Quality

Models of how things spread often assume that transmission mechanisms are fixed over time. However, social contagions—the spread of ideas, beliefs, innovations—can lose or gain in momentum as they spread: ideas can get reinforced, beliefs strengthened, products refined. We study the impacts of such self-reinforcement mechanisms in cascade dynamics. We use different mathematical modeling techniques to capture the recursive, yet changing nature of the process. We find a critical regime with a range of power-law cascade size distributions with nonuniversal scaling exponents. This regime clashes with classic models, where criticality requires fine-tuning at a precise critical point. Self-reinforced cascades produce critical-like behavior over a wide range of parameters, which may help explain the ubiquity of power-law distributions in empirical social data.

Urban Systems

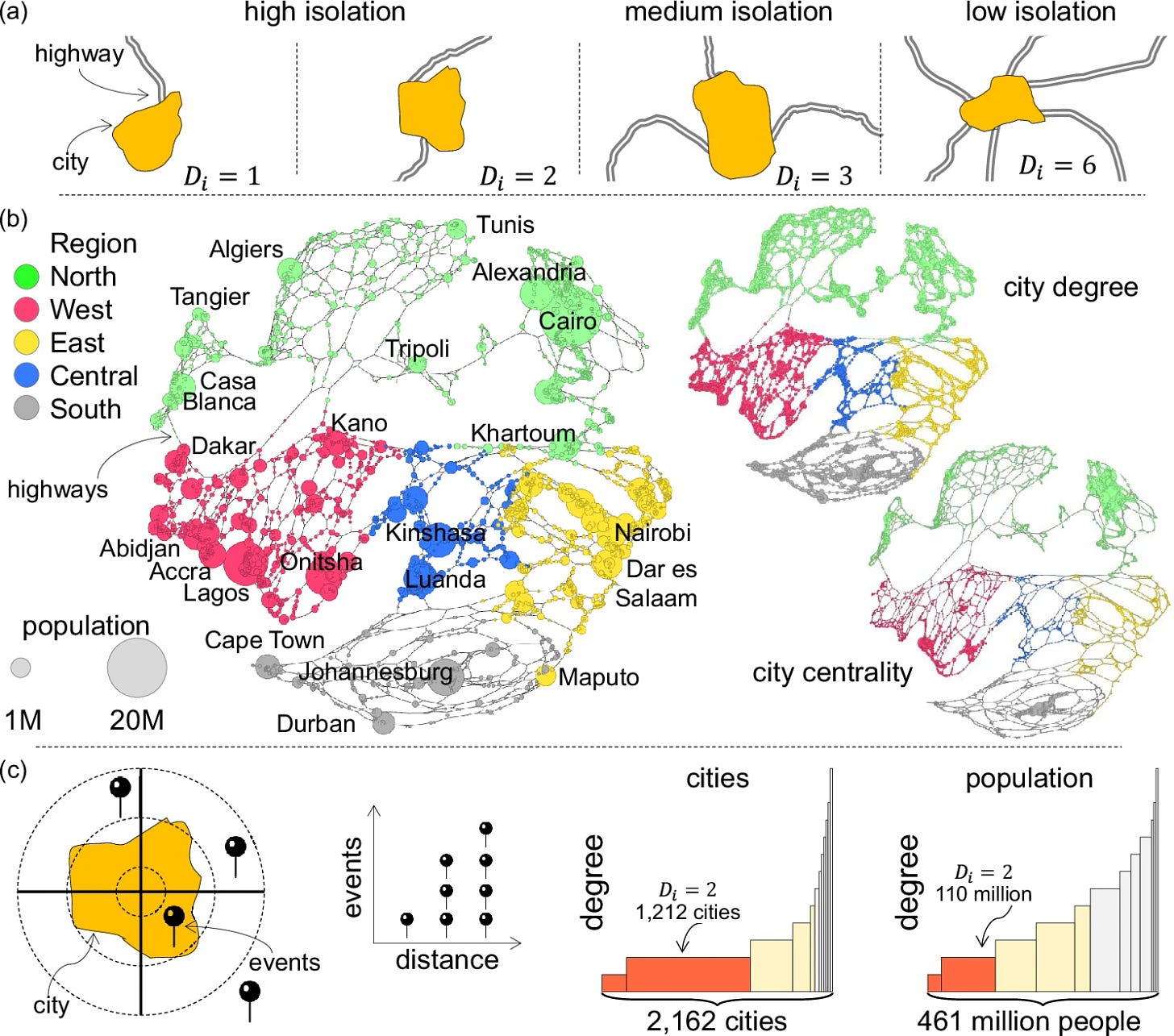

Violence, city size and geographical isolation in African cities

Different types of violence are commonly linked with large urban areas, often presumed to scale superlinearly with population size (i.e., to be disproportionately higher in larger cities). This study explores the hypothesis that smaller, isolated cities in Africa may experience a heightened intensity of violence against civilians. It aims to investigate the correlation between the risk of experiencing violence, a city’s size and its geographical isolation. Between 2000 and 2023, incidents of civilian casualties were analysed to assess lethality in relation to varying levels of isolation and city size. African cities are categorised by isolation (measured by the number of highway connections) and centrality (the estimated frequency of journeys). We show that violence against civilians exhibits a sublinear pattern, with larger cities witnessing fewer events and casualties per 100,000 inhabitants. Individuals in isolated cities face a fourfold higher risk of becoming casualties compared with those in more connected cities.
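To make "sublinear" concrete: if event counts follow Y ≈ a·N^β with β < 1, the per-capita rate falls as population N grows. The sketch below fits β by least squares on log-transformed values. The data are synthetic and generated on the spot (the prefactor, exponent and noise level are illustrative assumptions, not values from the paper), and the function name is hypothetical.

```python
import math
import random


def fit_scaling_exponent(populations, counts):
    """Least-squares fit of log(count) = log(a) + beta * log(population)."""
    xs = [math.log(p) for p in populations]
    ys = [math.log(c) for c in counts]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    beta = sxy / sxx
    prefactor = math.exp(mean_y - beta * mean_x)
    return beta, prefactor


# Synthetic cities (assumption): populations between 10^4 and 10^7,
# counts generated with a known sublinear exponent plus log-normal noise.
rng = random.Random(0)
TRUE_BETA = 0.8
populations = [10 ** rng.uniform(4, 7) for _ in range(200)]
counts = [0.05 * p ** TRUE_BETA * math.exp(rng.gauss(0, 0.3)) for p in populations]

beta, prefactor = fit_scaling_exponent(populations, counts)


def rate_per_100k(population):
    """Expected events per 100,000 inhabitants under the fitted law."""
    return prefactor * population ** beta / population * 100_000


rate_small = rate_per_100k(1e4)
rate_large = rate_per_100k(1e7)
print(f"fitted beta = {beta:.2f}")
print(f"rate per 100k: small city {rate_small:.1f}, large city {rate_large:.1f}")
```

With β below 1 the fitted per-capita rate is higher for the small city than for the large one, which is the pattern the abstract describes.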

Universal Model of Urban Street Networks

Analyzing 9000 urban areas’ street networks, we identify properties, including extreme betweenness centrality heterogeneity, that typical spatial network models fail to explain. Accordingly we propose a universal, parsimonious, generative model based on a two-step mechanism that begins with a spanning tree as a backbone then iteratively adds edges to match empirical degree distributions. Controlled by a single parameter representing lattice-equivalent node density, it accurately reproduces key universal properties to bridge the gap between empirical observations and generative models.
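The two-step idea (a spanning-tree backbone, then extra edges) can be illustrated with a toy sketch. This is not the authors' model: here the backbone is a Euclidean minimum spanning tree over random points, and the second step simply adds the shortest missing edges until a target mean degree is reached, a much cruder stand-in for matching empirical degree distributions. The function names, the point set and the target value are all assumptions made for illustration.

```python
import math
import random


def minimum_spanning_tree(points):
    """Prim's algorithm on the complete Euclidean graph.

    Returns a set of edges (i, j) with i < j.
    """
    n = len(points)
    in_tree = [False] * n
    best_dist = [math.inf] * n
    best_parent = [-1] * n
    best_dist[0] = 0.0
    edges = set()
    for _ in range(n):
        # Pick the closest node that is not yet in the tree.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best_dist[i])
        in_tree[u] = True
        if best_parent[u] >= 0:
            edges.add((min(u, best_parent[u]), max(u, best_parent[u])))
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best_dist[v]:
                    best_dist[v] = d
                    best_parent[v] = u
    return edges


def add_short_edges(points, tree_edges, target_mean_degree):
    """Add the shortest missing edges until the mean degree reaches the target."""
    n = len(points)
    edges = set(tree_edges)
    candidates = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i in range(n)
        for j in range(i + 1, n)
        if (i, j) not in edges
    )
    for _, i, j in candidates:
        if 2 * len(edges) / n >= target_mean_degree:
            break
        edges.add((i, j))
    return edges


rng = random.Random(1)
points = [(rng.random(), rng.random()) for _ in range(60)]  # hypothetical intersections
tree = minimum_spanning_tree(points)
network = add_short_edges(points, tree, target_mean_degree=2.6)  # illustrative target
print(len(tree), "tree edges;", len(network), "edges after step two")
```

A spanning tree on n nodes always has n - 1 edges, so the second step alone determines how many cycles the network ends up with.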

Universal Roughness and the Dynamics of Urban Expansion

Urban sprawl reshapes cities, yet its quantitative laws remain elusive. Analyzing built-up expansion in 19 cities (1985–2015) with tools from surface growth physics in radial geometry, we reveal anisotropic, branchlike growth and a piecewise linear scaling between area and population. We uncover a robust local roughness exponent α_loc ≈ 0.54, coexisting with variable β and z. This unusual coexistence of universal and variable exponents offers a rare empirical test bed for nonequilibrium growth and an empirical basis for modeling urban sprawl.

Biological Systems

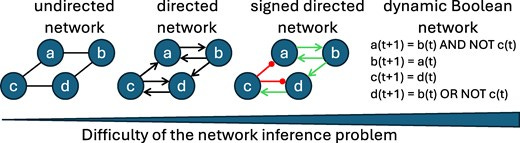

The inference of gene regulatory networks (GRNs) from high-throughput data constitutes a fundamental and challenging task in systems biology. Boolean networks are a popular modeling framework to understand the dynamic nature of GRNs. In the absence of reliable methods to infer the regulatory logic of Boolean GRN models, researchers frequently assume threshold logic as a default. Using the largest repository of published expert-curated Boolean GRN models as best proxy of reality, we systematically compare the ability of two popular threshold formalisms, the Ising and the 01 formalism, to truthfully recover biological functions and biological system dynamics. While Ising rules match fewer biological functions exactly than 01 rules, they yield a better average agreement. In general, more complex regulatory logic proves harder to be represented by either threshold formalism. Informed by these results and a meta-analysis of regulatory logic, we propose modified versions for both formalisms, which provide a better function-level and dynamic agreement with biological GRN models than the usual threshold formalisms. For small biological GRN models with low connectivity, corresponding threshold networks exhibit similar dynamics. However, they generally fail to recover the dynamics of large networks or highly connected networks. In conclusion, this study provides new insights into an important question in computational systems biology: how truthfully do Boolean threshold networks capture the dynamics of GRNs?
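The difference between the two threshold formalisms is easiest to see on a tiny example. The sketch below assumes a common convention: a node sums its weighted inputs, switches on if the sum is positive, off if it is negative, and keeps its current state on a tie. In the 01 formalism an absent regulator contributes 0; in the Ising formalism it contributes -1 times its weight. The tie rule, the function names and the unit weights are assumptions for illustration and may differ from the exact definitions used in the paper.

```python
from itertools import product


def threshold_update(weights, inputs, current, formalism):
    """Next state (0 or 1) of a node under a threshold rule.

    weights: +1 for an activator, -1 for an inhibitor (illustrative choice)
    inputs: regulator states as 0/1
    current: the node's own state, kept when the weighted sum is zero
    """
    if formalism == "ising":
        values = [2 * x - 1 for x in inputs]  # map 0/1 to -1/+1
    elif formalism == "01":
        values = list(inputs)
    else:
        raise ValueError(f"unknown formalism: {formalism}")
    total = sum(w * v for w, v in zip(weights, values))
    if total > 0:
        return 1
    if total < 0:
        return 0
    return current


def truth_table(weights, current, formalism):
    """Outputs for all input combinations, in the order (0,0), (0,1), (1,0), (1,1), ..."""
    return [
        threshold_update(weights, inputs, current, formalism)
        for inputs in product((0, 1), repeat=len(weights))
    ]


two_activators = [1, 1]
table_01 = truth_table(two_activators, current=0, formalism="01")
table_ising = truth_table(two_activators, current=0, formalism="ising")
print("01:   ", table_01)     # [0, 1, 1, 1]
print("Ising:", table_ising)  # [0, 0, 0, 1]
```

For a node that is currently off and has two activators, the 01 rule behaves like OR while the Ising rule behaves like AND, because in the Ising encoding an absent activator actively pushes the sum down. The same wiring diagram can therefore imply different regulatory logic depending on the formalism.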

Human behavior

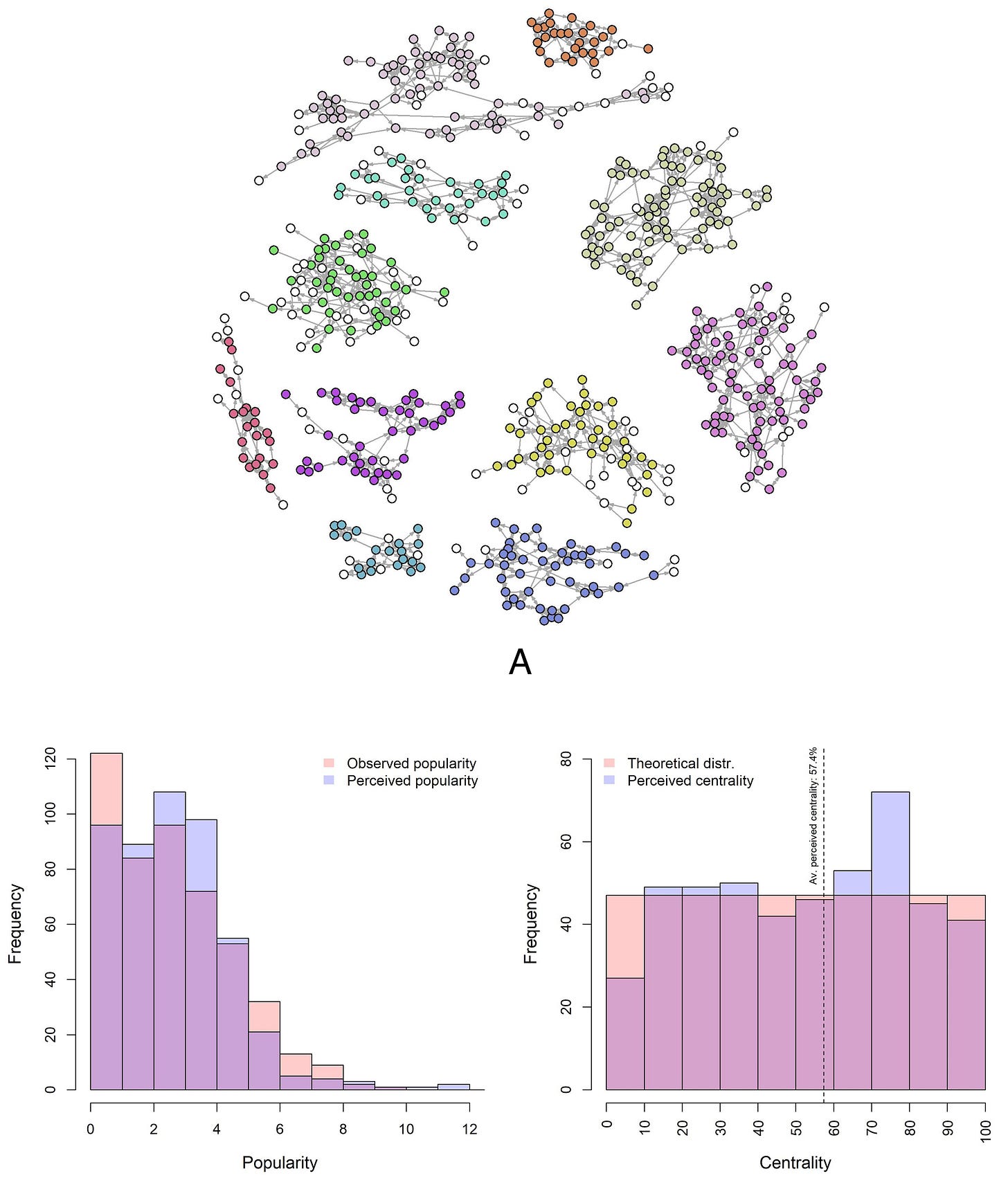

Perception of own centrality in social networks

People’s success in social and professional settings depends on understanding their own and others’ roles in social networks, while accurate network perception helps target key individuals more effectively in public health, education, and organizations, strengthening trust and collaboration. We find that individuals systematically misperceive their popularity and centrality as well as that of others, an observation that is not explained by the intricacies of network structure. Importantly, these misperceptions predict academic outcomes, demonstrating their real-life impact beyond social life. Our findings suggest that boosting individuals’ understanding of social structure can support both academic achievement and stronger, more connected communities, offering pathways for interventions aimed at improving group dynamics and social inclusion.

This study explores how individuals perceive their social networks, with a focus on their own positioning. Using experimental methods and network analysis, we show that people have a limited understanding of their social standing in terms of popularity (in-degree) and centrality. Few participants accurately estimate their popularity, and even fewer correctly identify their decile of centrality. A similar pattern emerges for their perceptions of the most popular and central individuals, but we find no correlation between the ability to assess one’s own position and the ability to detect key network members. Popular participants correctly perceive themselves as more popular, although they tend to misjudge their popularity more than less popular peers. They are nonetheless more accurate in estimating their centrality. Perceived centrality is only weakly correlated with actual centrality, but central individuals misperceive both their popularity and centrality to a greater extent. We further show that these misperceptions have real-world implications. Conditional on network positioning, students who see themselves as less popular and less central–and those with more accurate self-perceptions–tend to achieve higher grades, whereas individuals recognized by others as popular and central perform significantly better academically. These findings challenge theoretical models that assume accurate self-awareness of network positions and highlight the need to reconsider the implications for key-player interventions in public health, education, and organizational contexts.

The nonlinear feedback dynamics of asymmetric political polarization

Political polarization threatens democracy in America. This article helps illuminate what drives it, as well as what factors account for its asymmetric nature. In particular, we focus on positive feedback among members of Congress as the key mechanism of polarization. We show how public opinion, which responds to the laws legislators make, in turn drives the feedback dynamics of political elites. Specifically, we find that voters’ “policy mood,” i.e., whether public opinion leans in a more liberal or conservative direction, drives asymmetries in elite polarization over time. Our model also demonstrates that once self-reinforcing processes among elites reach a critical threshold, polarization rapidly accelerates. By tying together elite and voter dynamics, this paper presents a unified theory of political polarization.

Using a general model of opinion dynamics, we conduct a systematic investigation of key mechanisms driving elite polarization in the United States. We demonstrate that the self-reinforcing nature of elite-level processes can explain this polarization, with voter preferences accounting for its asymmetric nature. Our analysis suggests that subtle differences in the frequency and amplitude with which public opinion shifts left and right over time may have a differential effect on the self-reinforcing processes of elites, causing Republicans to polarize more quickly than Democrats. We find that as self-reinforcement approaches a critical threshold, polarization speeds up. Republicans appear to have crossed that threshold while Democrats are currently approaching it.

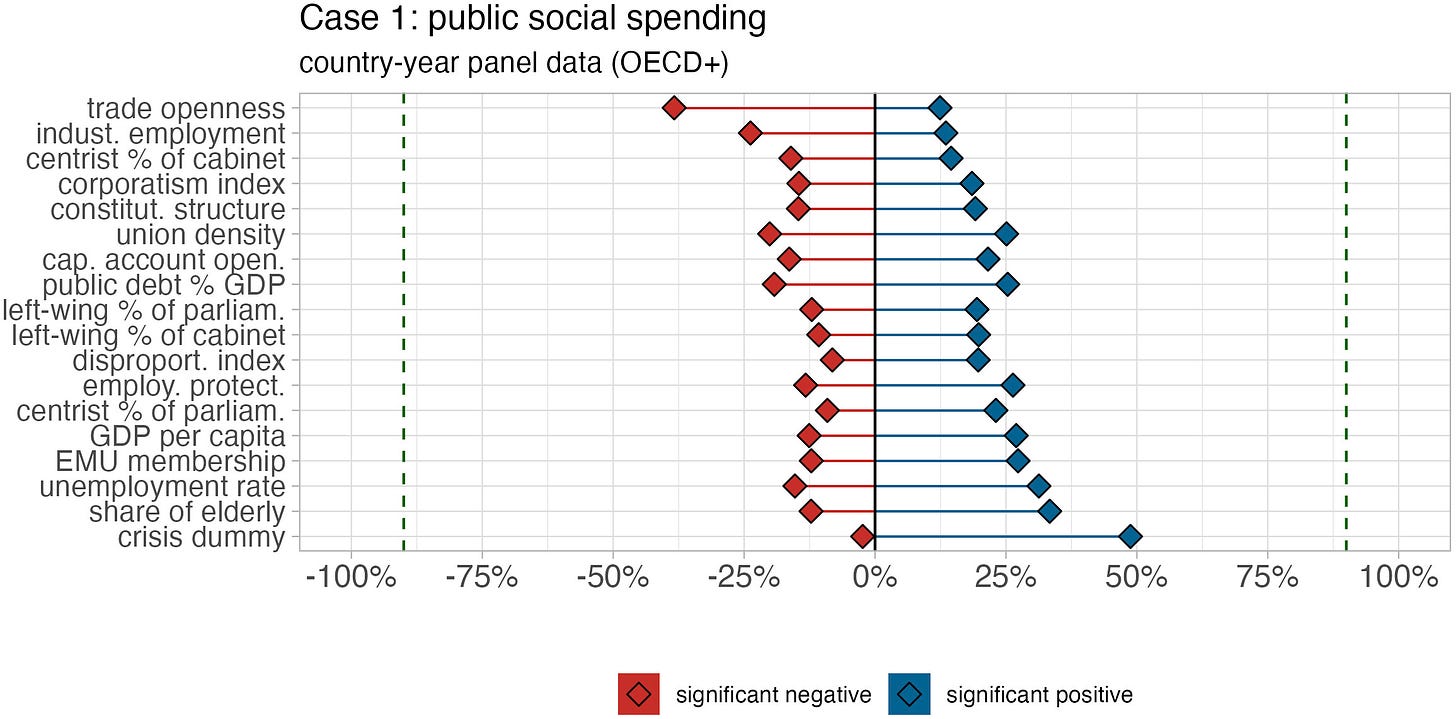

Estimating the extent and sources of model uncertainty in political science

Assessing model uncertainty is crucial to quantitative political science. Yet, most available sensitivity analyses focus only on a few modeling choices, most notably the covariate space, while neglecting to jointly consider several equally important modeling choices simultaneously. In this article, we combine the exhaustive and systematic method of the Extreme Bounds Analysis with the more multidimensional logic underpinning the multiverse approach to develop an approach to sensitivity analyses. This allows us to systematically assess the degree and sources of model uncertainty across multiple dimensions, including the control set, fixed effect structures, SE types, sample selection, and dependent variable operationalization. We then apply this method to four prominent topics in political science: democratization, institutional trust, public good provision, and welfare state generosity. Results from over 3.6 bn estimates reveal widespread model uncertainty, not just in terms of the statistical significance of the effects, but also their direction, with most independent variables yielding a substantive share of statistically significant positive and negative coefficients depending on model specification. We compare the strengths and weaknesses of three distinct approaches to estimating the relative importance of different model specification choices: nearest 1-neighbor; logistic; and deep learning. All three approaches reveal that the impact of the covariate space is relatively modest compared to the impact of sample selection and dependent variable operationalization. We conclude that model uncertainty stems more from sampling and measurement than conditioning and discuss the methodological implications for how to assess model uncertainty in the social sciences.

I also recommend reading the following paper right afterwards: Robustness is better assessed with a few thoughtful models than with billions of regressions

I argue that their approach substantially overstates uncertainty. Multiverse analyses are only informative when all included models represent equally plausible strategies for estimating the same estimand. Otherwise, including unjustified models (i.e., model specifications that are clearly inferior to alternatives) produces a mass of incommensurable and unreasonable effect size estimates.

Bio-inspired computing

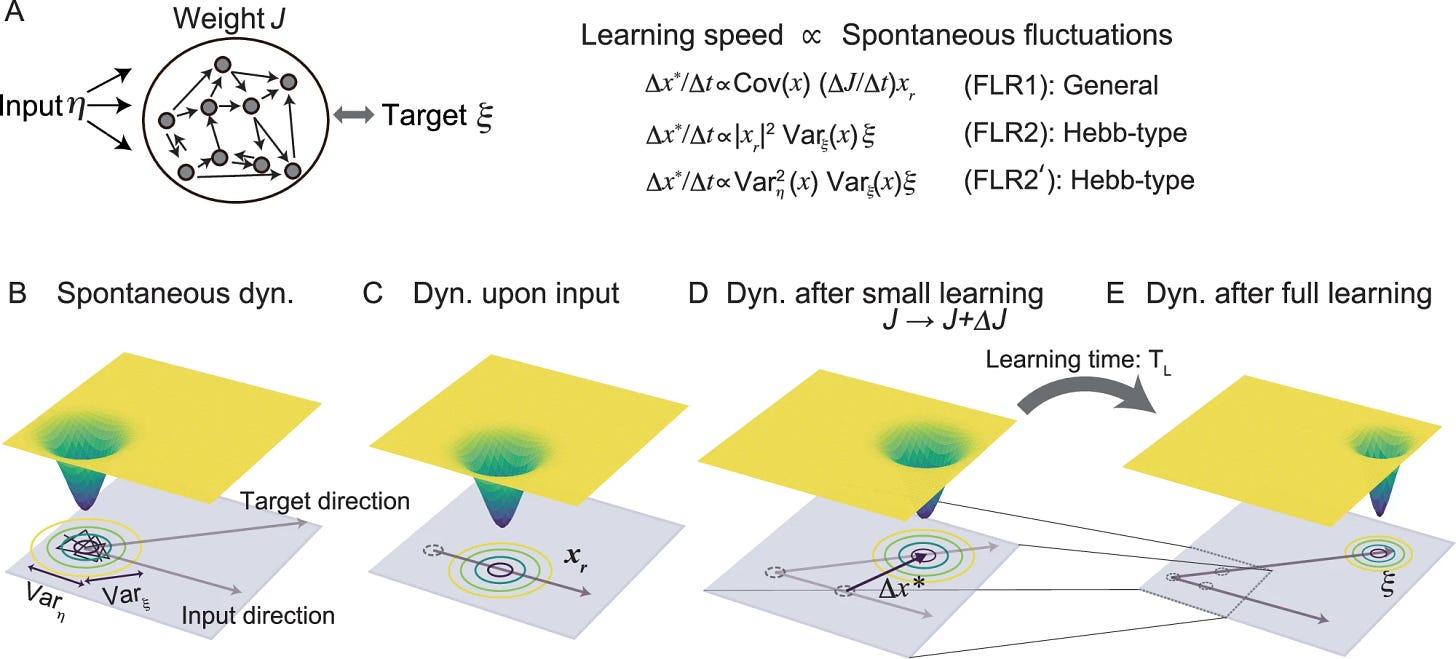

Fluctuation-learning relationship in recurrent neural networks

Learning speed depends on both task structure and neural dynamics prior to learning, yet a theory connecting them has been missing. Inspired by the fluctuation-response relation, we derive two formulae linking neural dynamics to learning. Initial learning speed is proportional to the covariance between pre-learning spontaneous activity and network’s input-evoked response, independent of the learning rule. For Hebb-type learning, initial speed scales with the variance of activity along target and input directions. These results apply across tasks including input-output mapping and time-series generation. Numerical simulations across diverse models validate the formulae beyond the theoretical-derivation’s assumptions. Although derived for early learning, the formulae predict total learning time. A straightforward implication is learning is faster when task-relevant directions align with high-variance spontaneous activities, consistent with empirical findings. Our framework establishes how the geometrical relationship between pre-learning dynamics and task directions governs learning speed, independent of details of tasks.

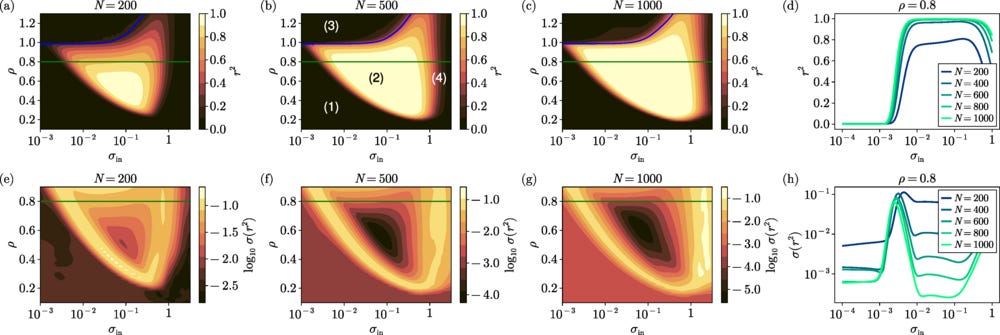

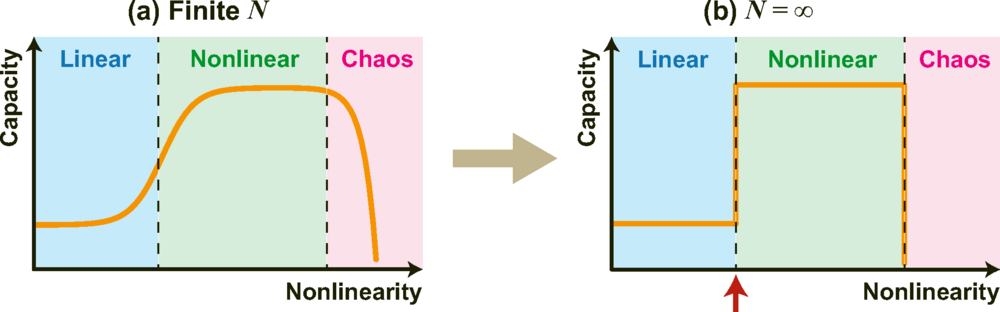

Phase transitions from linear to nonlinear information processing in neural networks

We investigate a phase transition from linear to nonlinear information processing in echo state networks, a widely used framework in reservoir computing. The network consists of randomly connected recurrent nodes perturbed by a noise and the output is obtained through linear regression on the network states. By varying the standard deviation of the input weights, we systematically control the nonlinearity of the network. For small input standard deviations, the network operates in an approximately linear regime, resulting in limited information processing capacity. However, beyond a critical threshold, the capacity increases rapidly, and this increase becomes sharper as the network size grows. Our results indicate the presence of a discontinuous transition in the limit of infinitely many nodes. This transition is fundamentally different from the conventional order-to-chaos transition in neural networks, which typically leads to a loss of long-term predictability and a decline in the information processing capacity. Furthermore, we establish a scaling law relating the critical nonlinearity to the noise intensity, which implies that the critical nonlinearity vanishes in the absence of noise.

Artificial Intelligence

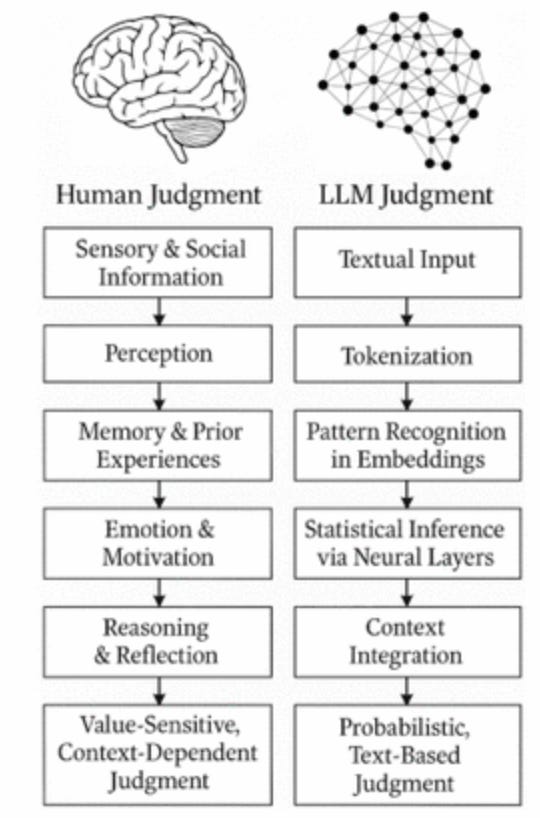

The simulation of judgment in LLMs

See also this Commentary.

Large Language Models (LLMs) are used in evaluative tasks across domains. Yet, what appears as alignment with human or expert judgments may conceal a deeper shift in how “judgment” itself is operationalized. Using news outlets as a controlled benchmark, we compare six LLMs to expert ratings and human evaluations under an identical, structured framework. While models often match expert outputs, our results suggest that they may rely on lexical associations and statistical priors rather than contextual reasoning or normative criteria. We term this divergence epistemia: the illusion of knowledge emerging when surface plausibility replaces verification. Our findings suggest not only performance asymmetries but also a shift in the heuristics underlying evaluative processes, raising fundamental questions about delegating judgment to LLMs.

Large Language Models (LLMs) are increasingly embedded in evaluative processes, from information filtering to assessing and addressing knowledge gaps through explanation and credibility judgments. This raises the need to examine how such evaluations are built, what assumptions they rely on, and how their strategies diverge from those of humans. We benchmark six LLMs against expert ratings—NewsGuard and Media Bias/Fact Check—and against human judgments collected through a controlled experiment. We use news domains purely as a controlled benchmark for evaluative tasks, focusing on the underlying mechanisms rather than on news classification per se. To enable direct comparison, we implement a structured agentic framework in which both models and nonexpert participants follow the same evaluation procedure: selecting criteria, retrieving content, and producing justifications. Despite output alignment, our findings show consistent differences in the observable criteria guiding model evaluations, suggesting that lexical associations and statistical priors could influence evaluations in ways that differ from contextual reasoning. This reliance is associated with systematic effects: political asymmetries and a tendency to confuse linguistic form with epistemic reliability—a dynamic we term epistemia, the illusion of knowledge that emerges when surface plausibility replaces verification. Indeed, delegating judgment to such systems may affect the heuristics underlying evaluative processes, suggesting a shift from normative reasoning toward pattern-based approximation and raising open questions about the role of LLMs in evaluative processes.

→ Please remember that if you find value in #ComplexityThoughts, you can help it grow by subscribing or by sharing it with friends, colleagues or on social media. See also this post to learn more about this space.

Phenomenal curation, especially the latent geometry piece linking structure and dynamics in networks. The insight about emergent geometry revealing mesoscale principles across domains is powerful because it gives us a unified lens where we've had fragmented approaches. I've been grappling with similar questions in transport networks, where static topology doesn't predict flow behavior at all. The multi-resolution reconstruction methods sound like exactly the conceptual bridge needed there.